The AI Coding Reality Check

Understanding the Security Risks

Welcome back! In the last chapter, we explored why AI coding assistants have become so popular. The productivity gains are real, the adoption is widespread, and developers love these tools.

Now it's time for the reality check.

While AI coding assistants offer incredible benefits, they also introduce serious security risks that most teams aren't prepared for. The same factors that make these tools powerful — training on massive public codebases, generating code without human oversight, operating at speed — also make them dangerous when used without proper guardrails.

Let's dive into what can go wrong.

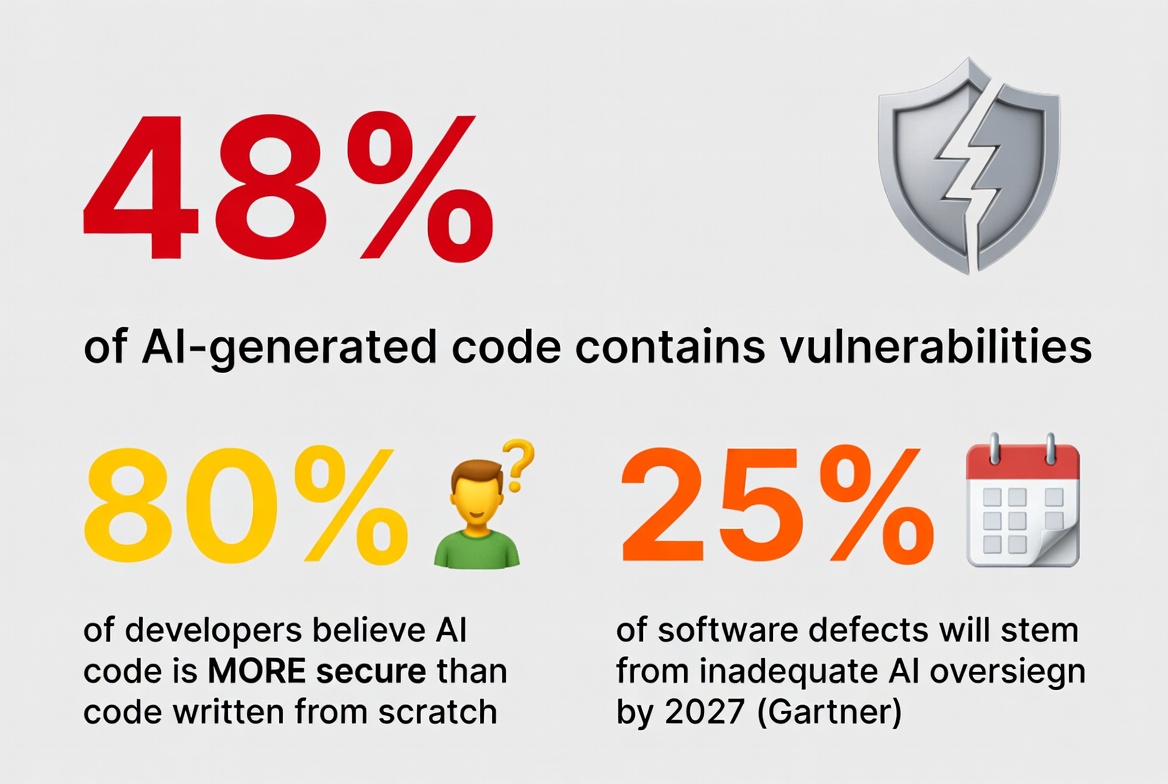

Before we get into the details, let's start with some numbers that should get your attention:

Let that sink in: Nearly half the code these tools generate has security problems, but most developers think it's more secure than what they'd write themselves. This is a recipe for disaster. Establish an AI code oversight KPI (for example, percentage of AI‑authored PRs passing security gates) to preempt this risk.

Training on Vulnerable Public Code — Here's the fundamental problem: AI coding assistants are trained on public code repositories—GitHub, Stack Overflow, open source projects, developer forums. They learn patterns from millions of lines of code written by thousands of developers with varying skill levels and security awareness. Much of the public code these models learn from contains vulnerabilities. Insecure patterns get copied, pasted, and propagated across projects. When an AI model trains on this data, it learns to reproduce these same vulnerable patterns. Think of it this way: Training a chef on random internet videos yields mixed results; likewise, AI reproduces what's common in its data, not what's secure.

Consider SQL injection—one of the oldest and most well-known vulnerabilities. Academic research found that AI assistants regularly suggest code like this:

python

This is textbook SQL injection vulnerability. Any attacker can manipulate the username variable to execute arbitrary SQL commands. Yet AI suggests this pattern because it appears frequently in public code. The secure version uses parameterized queries:

python

But if the vulnerable pattern appears more often in training data, the AI will suggest the vulnerable version first. AI models don't reason about security. They don't think "this could be exploited by an attacker." They pattern-match based on statistical likelihood. If insecure code is common in training data, the AI will reproduce it. This means every vulnerability that's common in public code—SQL injection, cross-site scripting (XSS), hardcoded credentials, missing input validation—gets learned and reproduced by AI assistants.

Guardrail: Enable IDE/CI security linting and PR checklists; require parameterized queries and secure-by-default snippets in templates and examples.

Code Without Security Intent — Even when AI generates syntactically correct code that appears to work, it often lacks critical security controls because the prompt didn't explicitly ask for them. AI-generated code frequently omits input validation (no checks on user input before processing), authentication checks (missing verification that users are who they claim to be), authorization checks (no validation that users have permission to access resources), output encoding (missing protection against XSS attacks), error handling (exposing sensitive information in error messages), rate limiting (no protection against abuse or denial-of-service), and secure defaults (using insecure configurations as defaults).

Imagine asking an AI to "create an API endpoint to update user profiles." The AI might generate:

javascript

This code works. It updates the database. But it has multiple security problems: no authentication check (anyone can update anyone's profile), no authorization check (users can change their own role to admin), no input validation (malicious data gets written directly to database), and it reflects potentially sensitive user data in response. A security-aware developer would catch these issues. But if a junior developer or someone working quickly copies this code and ships it, you've just introduced multiple critical vulnerabilities.

AI models optimize for completing the immediate task described in the prompt. If you ask for "an endpoint to update profiles," that's what you get—nothing more. Security controls are add-ons in the AI's view, not core requirements. Unless you explicitly prompt for security features, you won't get them. Here's a more secure version:

javascript

Guardrail: Bake security into prompts and PR templates (authN/authZ, validation, encoding); block merges without automated tests and static checks passing.

Deprecated and Vulnerable Dependencies — AI coding assistants not only generate vulnerable code logic—they also suggest vulnerable or outdated libraries and dependencies. When you ask an AI to "add JWT authentication," it might suggest:

javascript

Version 0.4.0 of jsonwebtoken has known vulnerabilities that were fixed in later versions. But if that version appeared frequently in the AI's training data (because it was popular when much of the training data was written), the AI might suggest it. The same applies to libraries with known CVEs (Common Vulnerabilities and Exposures), deprecated packages that are no longer maintained, packages with insecure default configurations, and libraries that have been replaced by more secure alternatives.

This creates a supply chain vulnerability problem. Your application becomes dependent on insecure third-party code, and attackers can exploit those dependencies even if your own logic is secure. Many organizations don't realize they've inherited vulnerabilities through AI-suggested dependencies until a security scan flags them—or worse, until they're exploited in production.

Guardrail: Enforce SCA in IDE/CI, pin minimal secure versions, allowlist approved libraries, automate upgrades (e.g., Renovate/Dependabot), and generate/monitor SBOMs in CI.

IP Contamination and Copyright Issues — Beyond security vulnerabilities, AI coding assistants pose intellectual property risks. AI models are trained on public code repositories, many of which contain copyrighted code under various open source licenses. Some licenses (like GPL) require derivative works to use the same license. Others (like MIT or Apache) have attribution requirements. When an AI generates code, it might reproduce substantial portions of licensed code verbatim without attribution, creating legal exposure: license violations (using GPL-licensed code in proprietary software), copyright infringement (reproducing copyrighted code without permission), and patent issues (implementing patented algorithms without licensing).

There's another concern: what happens to your proprietary code when developers use it to prompt AI assistants? When a developer copies internal code into ChatGPT or another cloud-based AI tool to ask "explain this function" or "refactor this code," that proprietary code gets sent to third-party servers. Depending on the tool's terms of service, the code might be stored indefinitely, it might be used to train future models, it might be visible to the AI provider's employees, and it could potentially leak to other users in edge cases. This is especially concerning for organizations with strict confidentiality requirements, regulated industries, or proprietary algorithms that represent competitive advantages.

Guardrail: Enable license and secret scanning; mandate zero-retention providers or self-hosted models; forbid pasting proprietary code into public tools; document approved data-sharing rules.

The False Security Assumption — Perhaps the most dangerous risk isn't technical—it's psychological. The Snyk survey finding that 80% of developers believe AI-generated code is more secure reveals a fundamental misunderstanding. Developers are treating AI assistants like trusted experts rather than tools that reproduce patterns from public internet code. This false sense of security leads to reduced code review rigor ("The AI wrote it, so it's probably fine"), shipping code without testing (trusting AI output without validation), skipping security reviews (assuming AI-generated code is vetted), and overconfidence in coverage (believing security is handled automatically).

This problem is especially acute with junior developers who lack the experience to spot security issues. When a senior developer reviews AI-generated code, they might catch the missing authentication check. When a junior developer reviews it, they might not even realize authentication should be there. AI assistants essentially act as a very productive junior developer who's read a lot of code but doesn't understand security implications. If you wouldn't merge code from a junior developer without senior review, you shouldn't merge AI-generated code without it either.

Guardrail: Treat AI code as untrusted; require senior review for AI-authored changes; block merges without tests and security scans.

Prompt Injection Attacks — Here's a newer risk that many teams haven't considered: AI assistants themselves can be attacked through prompt injection. Prompt injection is when an attacker manipulates the input to an AI system to make it behave unexpectedly. In the context of coding assistants, this could happen when a developer copies code from an untrusted source that contains hidden instructions, comments in code repositories contain malicious prompts, or AI tools read and interpret malicious content from dependencies.

For example, a malicious actor could add a comment to a popular open source library:

python

If an AI reads this while generating code, it might actually follow that instruction and suggest insecure code. While this is an emerging threat, researchers have demonstrated practical, enterprise‑impacting abuses across assistants and connectors: 6 ChatGPT integrated with Google Drive can be coerced via a crafted file to search a victim's Drive and exfiltrate API keys when processed, Microsoft Copilot Studio agents exposed to the internet can be hijacked to extract CRM data at scale, Cursor integrated with Jira MCP can be driven by malicious tickets to harvest credentials automatically, and similar techniques targeted Gemini and Salesforce Einstein (some issues were patched, but others were marked "won't fix," underscoring ecosystem risk).

Guardrail: Limit assistant integrations and scopes; require explicit human approval for agent actions; sandbox connectors; restrict processed file types; log and alert on assistant‑initiated data access; disable auto‑execution of external instructions; train developers on prompt-injection risks; restrict assistant context to trusted files; disable auto-following external content; scan generated diffs for insecure directives.

Beyond specific vulnerabilities, AI-generated code can create subtler long-term problems. AI often generates more code than necessary, with extra abstractions, redundant error handling, or over-engineered solutions. More code means more attack surface for vulnerabilities, higher maintenance burden, slower performance, and harder audits for security issues. AI models sometimes generate code that looks correct but contains subtle logic errors or references functions/libraries that don't exist—these "hallucinations" can introduce bugs that are hard to track down. When developers rely heavily on AI, they may stop truly understanding the code they're shipping, creating a culture where code is copied without comprehension, making it harder to maintain, debug, and secure over time.

If these risks sound alarming, good—they should be. But the solution isn't to ban AI tools. As we discussed in Chapter 1, that just drives usage underground. The solution is to acknowledge the risks (understand what can go wrong), set realistic expectations (AI code needs oversight), implement guardrails (technical controls and policies), train developers (build security awareness), and treat AI as a junior developer (all code needs review). We'll cover all of these in detail throughout this course.

In the previous chapter, we saw why developers love AI coding assistants. In this chapter, we've seen why security teams are terrified of them. The truth is, both perspectives are valid. AI tools offer real productivity gains and introduce real security risks. In the next chapter, we'll explore The Shadow AI Problem—what happens when you try to ban these tools, and why a prohibition-only approach doesn't work.

Before moving on, make sure you understand these key takeaways:

[1] arXiv (2024) – How secure is AI-generated Code: A Large-Scale Comparison of Large Language Models

[2] arXiv (2023) – Security Weaknesses of Copilot-Generated Code in GitHub Projects: An Empirical Study

[3] Snyk (2024) – 2024 Open Source Security Report: Slowing Progress and New Challenges for DevSecOps

[4] Gartner (2023) – Over 100 Data, Analytics and AI Predictions Through 2030

[5] IEEE Security & Privacy (2024) – State of the Art of the Security of Code Generated by LLMs: A Systematic Literature Review

[6] SecurityWeek (2024) – Major Enterprise AI Assistants Can Be Abused for Data Theft, Manipulation

Mark this chapter as finished to continue

Mark this chapter as finished to continue