The AI Coding Reality Check

Understanding the Security Risks

Here's an uncomfortable question: When an AI assistant generates code for you, who owns it?

You? The AI provider? The original developer whose code the AI learned from?

And here's an even more uncomfortable follow-up: What if the AI just reproduced copyrighted code verbatim, and you committed it to your codebase?

Welcome to one of the most legally murky and financially risky aspects of AI coding assistants: intellectual property and license contamination.

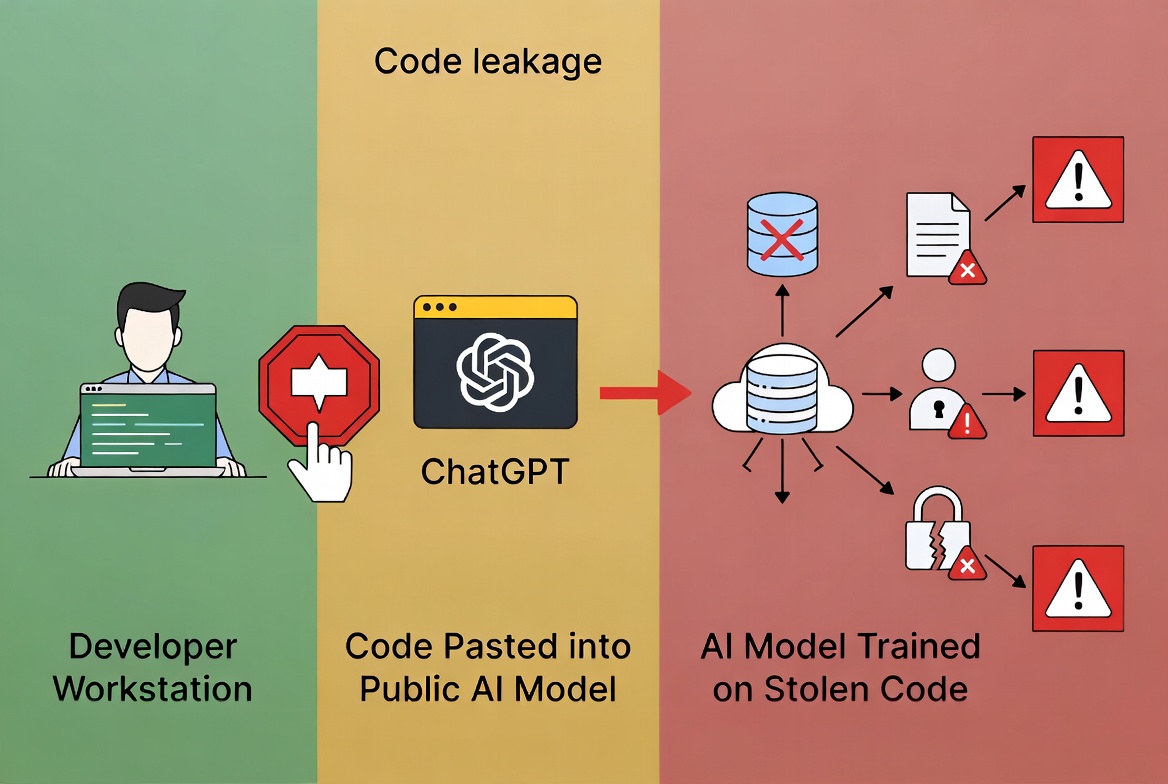

Unlike the other security risks we've covered, this one doesn't involve data breaches or exploits. Instead, it threatens your organization through legal liability and financial exposure.

The legal landscape is evolving rapidly, with landmark lawsuits and regulatory actions reshaping what's permissible. Let's understand the risks and how to protect your organization.

The intellectual property risks around AI-generated code aren't theoretical — they're materializing in courtrooms and boardrooms right now.

November 2024: A California judge refused to dismiss key claims in the GitHub Copilot lawsuit, allowing copyright infringement allegations to proceed. The judge found that plaintiffs plausibly alleged that Copilot could reproduce copyrighted code 1.

March 2024: The European Parliament approved the EU AI Act. The Act requires providers of general-purpose AI models to publish a summary of the content used in training, and its most serious violations carry fines of up to €35 million or 7% of global annual turnover 4.

March 2023: The U.S. Copyright Office issued registration guidance stating that AI-generated content may not be copyrightable if created without human creative input, raising questions about ownership of AI-assisted code 5.

2023: Getty Images sued Stability AI for using millions of copyrighted images in training. The outcome could set a precedent that extends to code 6.

MongoDB's Licensing Battle: In 2018, MongoDB changed its license from AGPL to SSPL (Server Side Public License) specifically to prevent cloud providers from offering MongoDB as a service without contributing back. The conflict shows how licensing disputes can force business model changes 7.

Elastic vs. AWS: Elastic moved Elasticsearch from Apache 2.0 to SSPL (alongside its own Elastic License) after AWS offered a competing managed service built on Elasticsearch without, in Elastic's view, contributing back. The dispute led AWS to create the OpenSearch fork 8.

Oracle vs. Google: The decade-long Java API copyright case put billions of dollars in potential damages at stake before the Supreme Court ruled for Google in 2021. It demonstrates the scale of IP litigation in software 9.

Now imagine: What if your AI assistant generated code that triggers similar disputes?

There are four distinct but interconnected IP risks when using AI coding assistants:

1. Code Memorization and Reproduction

AI models don't just learn patterns — they can memorize and reproduce exact code snippets from their training data.

How it happens:

Large language models are trained on billions of lines of code scraped from public repositories, Stack Overflow, documentation, and more. While they generally produce novel combinations, they can sometimes reproduce training examples verbatim or nearly verbatim.

Research findings:

A 2023 study by New York University researchers found that GitHub Copilot reproduced code verbatim (matching at least 150 characters) from its training data approximately 1% of the time when generating longer code completions 10.

That might sound rare, but the rate applies to every longer completion, so it adds up quickly across a team's daily usage.
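A quick back-of-the-envelope calculation puts the ~1% figure in perspective. This sketch assumes that each longer completion independently has the same 1% chance of containing a verbatim match. Real usage will not satisfy that assumption exactly, and the completion counts are hypothetical.

```python
def expected_matches(completions: int, rate: float = 0.01) -> float:
    """Expected number of completions that contain a verbatim match."""
    return completions * rate


def prob_at_least_one(completions: int, rate: float = 0.01) -> float:
    """Chance that at least one completion contains a verbatim match,
    treating every completion as an independent trial (a simplification)."""
    return 1.0 - (1.0 - rate) ** completions


# Hypothetical numbers of longer completions accepted by a team.
for n in (10, 100, 1_000):
    print(f"{n:>5} completions: expected matches {expected_matches(n):.1f}, "
          f"P(at least one) {prob_at_least_one(n):.1%}")
```

Under these assumptions, 100 longer completions already carry roughly a 63% chance (1 - 0.99^100 ≈ 0.634) that at least one contains verbatim training data.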

Example of memorization: a function from an MIT-licensed repository in the training data is suggested back nearly identically, with only cosmetic changes.

The magic constant 0x5f3759df and the algorithm structure are distinctive enough that this is clearly memorized, not independently derived. If the original was GPL-licensed (the best-known version, from id Software's Quake III Arena source release, is distributed under the GPL) and your codebase is proprietary, you may have just introduced a license violation.
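For reference, here is a short Python transcription of the algorithm in question. The widely circulated original is written in C, and this sketch is for illustration only; it is not a copy of either snippet in the example. It shows the three steps that make the code so recognizable: reinterpreting the float's bits as an integer, subtracting from the magic constant, and one Newton-Raphson refinement.

```python
import struct


def fast_inverse_sqrt(x: float) -> float:
    """Approximate 1/sqrt(x) for positive x using the 0x5f3759df bit trick."""
    # Reinterpret the 32-bit float's bits as an unsigned 32-bit integer.
    i = struct.unpack("<I", struct.pack("<f", x))[0]
    # The distinctive "magic constant" step that fingerprints this algorithm.
    i = 0x5F3759DF - (i >> 1)
    # Reinterpret the integer bits back as a 32-bit float.
    y = struct.unpack("<f", struct.pack("<I", i))[0]
    # One Newton-Raphson iteration to refine the estimate.
    return y * (1.5 - 0.5 * x * y * y)


print(fast_inverse_sqrt(4.0))  # close to the exact value 0.5
```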

2. License Contamination

Open-source licenses come with requirements. Some are permissive (MIT, Apache). Others are copyleft: they require that derivative works you distribute be released under the same license.

The license spectrum:

| License Type | Examples | Key Requirement | Risk Level |
|---|---|---|---|
| Public Domain | Unlicense, CC0 | None; use freely | ✅ No risk |
| Permissive | MIT, BSD, Apache 2.0 | Attribution (keep copyright and license notices) | 🟡 Low risk |
| Weak Copyleft | LGPL, MPL | Modifications to the licensed code must be shared; code that only uses the library is generally unaffected | 🟠 Moderate risk |
| Strong Copyleft | GPL-2.0, GPL-3.0, AGPL-3.0 | Derivative works must be released under the same license when distributed (AGPL: also when offered over a network) | 🔴 Critical risk |
| Commercial/Proprietary | Source-available, custom | Cannot use without a license | 🔴 Legal risk |

The contamination problem:

Suppose an AI assistant generates code that's substantially similar to GPL-licensed code, and you incorporate it into your proprietary application. You may have created a derivative work that can only be distributed under the GPL's terms, which puts the proprietary status of your entire codebase at risk.
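The license spectrum table above can be turned into a simple review rule. The sketch below is a hypothetical helper, not a substitute for legal review. It maps a handful of SPDX license identifiers to the table's tiers and returns a decision for a snippet whose license you have already identified. It deliberately ignores license-to-license compatibility (for example, GPL-2.0-only code in a GPL-3.0 project).

```python
from enum import Enum


class Tier(Enum):
    PUBLIC_DOMAIN = "public domain"
    PERMISSIVE = "permissive"
    WEAK_COPYLEFT = "weak copyleft"
    STRONG_COPYLEFT = "strong copyleft"
    UNKNOWN = "unknown"


# A small illustrative subset of SPDX identifiers, grouped by tier.
TIERS = {
    "Unlicense": Tier.PUBLIC_DOMAIN,
    "CC0-1.0": Tier.PUBLIC_DOMAIN,
    "MIT": Tier.PERMISSIVE,
    "BSD-2-Clause": Tier.PERMISSIVE,
    "BSD-3-Clause": Tier.PERMISSIVE,
    "Apache-2.0": Tier.PERMISSIVE,
    "LGPL-2.1-only": Tier.WEAK_COPYLEFT,
    "LGPL-3.0-only": Tier.WEAK_COPYLEFT,
    "MPL-2.0": Tier.WEAK_COPYLEFT,
    "GPL-2.0-only": Tier.STRONG_COPYLEFT,
    "GPL-3.0-only": Tier.STRONG_COPYLEFT,
    "AGPL-3.0-only": Tier.STRONG_COPYLEFT,
}


def review_snippet(snippet_license: str, project_is_proprietary: bool) -> str:
    """Return a coarse decision for including a snippet with a known license."""
    tier = TIERS.get(snippet_license, Tier.UNKNOWN)
    if tier is Tier.UNKNOWN:
        return "block: unrecognized license, send to legal review"
    if tier is Tier.STRONG_COPYLEFT:
        if project_is_proprietary:
            return "block: strong copyleft in a proprietary codebase"
        return "review: confirm compatibility with the project license"
    if tier is Tier.WEAK_COPYLEFT:
        return "review: check how the code is combined and what must be shared"
    if tier is Tier.PERMISSIVE:
        return "allow: keep the required attribution and license notice"
    return "allow"


print(review_snippet("GPL-3.0-only", project_is_proprietary=True))
```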

Real-world scenario:


This isn't hypothetical. The GitHub Copilot lawsuit specifically alleges that Copilot reproduces GPL code without providing proper attribution or license compliance mechanisms 1.

3. Training Data Provenance

Where did the AI's training data come from? This question has enormous legal implications.

The problem:

GitHub Copilot's training data:

GitHub Copilot was trained on code in public repositories on GitHub 11, and many of those repositories are published under licenses with attribution or copyleft conditions.

The legal question:

Is AI training "fair use"? Courts haven't definitively answered this yet.

Why this matters for you:

Even if you didn't violate a license yourself, you might still face legal exposure when you use the generated code if the AI provider violated one.

4. IP Ownership of AI-Generated Code

Who owns code generated by AI? The answer is surprisingly unclear.

Competing claims:

U.S. Copyright Office guidance (March 2023):

"When an AI technology receives solely a prompt from a human and produces complex written material in response, the 'traditional elements of authorship' are determined and executed by the technology — not the human user."

This means AI-generated code might not be protected by copyright at all, which could make exclusivity impossible to enforce.

Practical implications:

Scenario 1: Competitor copies your AI-generated code

Scenario 2: AI provider claims rights

Scenario 3: Employee uses AI to generate code

Contract language gaps:

Traditional employment IP clauses say:

"Employee assigns to Company all rights to inventions and works created during employment."

But does this cover code that an AI tool generated in response to an employee's prompt?

Employment contracts written before 2020 almost certainly don't address this.

Let's explore how IP contamination actually happens in practice.

Vector 1: Direct Reproduction of Copyrighted Code

The AI suggests code that's substantially similar to copyrighted source code.

As noted earlier under Code Memorization and Reproduction, models can directly reproduce distinctive implementations.

Detection is difficult:

Real case study:

A security researcher asked GitHub Copilot to implement a specific algorithm, and it generated code nearly identical to a GPL-licensed implementation.

The researcher ran the code through a plagiarism detector and found 89% similarity to the GPL codebase 12.
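Plagiarism and similarity tools compute scores like that 89% figure in different ways. As a rough illustration (not the tool the researcher used), Python's standard `difflib` can score two snippets line by line. It first strips comments and whitespace so that cosmetic edits don't hide a match.

```python
import difflib
import re
from typing import List


def normalize(code: str) -> List[str]:
    """Drop comments and blank lines and collapse whitespace, so cosmetic
    edits don't lower the score. Naive: also cuts '#' inside string literals."""
    lines = []
    for line in code.splitlines():
        line = re.sub(r"#.*", "", line)   # strip Python-style comments
        line = " ".join(line.split())     # collapse runs of whitespace
        if line:
            lines.append(line)
    return lines


def similarity(a: str, b: str) -> float:
    """Line-level similarity ratio between 0.0 and 1.0."""
    return difflib.SequenceMatcher(None, normalize(a), normalize(b)).ratio()


original = "def f(x):\n    return x + 1\n"
reformatted = "def f(x):  # increment\n\n    return   x + 1\n"
print(f"{similarity(original, reformatted):.0%}")
```

This compares whole lines, so renaming a single variable changes a line and lowers the score. Clone detectors that normalize identifiers are more robust to that kind of edit.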

Vector 2: Subtle Pattern Reproduction

The AI doesn't copy code verbatim but reproduces the distinctive structure and organization of protected code. Copyright protects that expression, not the underlying algorithm or idea.

Legal precedent:

In Oracle v. Google, the Federal Circuit held that the structure, sequence, and organization of the Java API could be protected by copyright. The Supreme Court later ruled for Google on fair use grounds, assuming for argument's sake that the API was copyrightable without deciding the question 9.

Example: an AI suggestion for a proprietary codebase that closely tracks an existing open-source implementation.

Variable names changed, method names changed, but the structure, approach, and distinctive priority-handling mechanism are identical. A court might find this is a derivative work.
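You can check whether renamed code keeps the same structure by comparing abstract syntax trees with the identifiers erased. The sketch below uses Python's standard `ast` module, and the two priority-queue functions are hypothetical stand-ins. It leaves constants and operators intact on purpose, because they are often the distinctive part.

```python
import ast


class _EraseNames(ast.NodeTransformer):
    """Replace identifiers with placeholders so only the code's shape remains."""

    def visit_FunctionDef(self, node: ast.FunctionDef) -> ast.AST:
        node.name = "FUNC"
        self.generic_visit(node)
        return node

    def visit_arg(self, node: ast.arg) -> ast.AST:
        node.arg = "ARG"
        self.generic_visit(node)
        return node

    def visit_Name(self, node: ast.Name) -> ast.AST:
        node.id = "VAR"
        return node

    def visit_Attribute(self, node: ast.Attribute) -> ast.AST:
        node.attr = "ATTR"
        self.generic_visit(node)
        return node


def structure(source: str) -> str:
    """Dump the AST of `source` with all names erased."""
    return ast.dump(_EraseNames().visit(ast.parse(source)))


def same_structure(a: str, b: str) -> bool:
    return structure(a) == structure(b)


original = """
def add_task(queue, task, priority):
    queue.append((priority, task))
    queue.sort(key=lambda item: item[0], reverse=True)
    return queue
"""

suggestion = """
def enqueue(jobs, job, level):
    jobs.append((level, job))
    jobs.sort(key=lambda entry: entry[0], reverse=True)
    return jobs
"""

print(same_structure(original, suggestion))  # True: only the names differ
```

A structural match like this is a signal for human and legal review, not proof of infringement.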

Vector 3: License Laundering Through AI

Developers intentionally or unintentionally use AI to "launder" GPL code into proprietary codebases.

How it works:

Why this doesn't work:

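Real scenario: a developer pastes GPL-licensed code into an AI assistant and asks it to rewrite the code with different names.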

This is legally equivalent to manually rewriting GPL code. The derivative work is still covered by the original license.

Vector 4: Transitive License Contamination

AI suggests code that depends on or links to GPL libraries, contaminating your codebase through dependencies.

Example: the AI suggests code that imports and calls a GPL-licensed library.

Even though your code is original, using the GPL library can bring your entire application under the GPL's terms when you distribute it, according to the Free Software Foundation's interpretation of the GPL 13.
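This risk arrives through dependencies, so it helps to check what your environment actually pulls in. The following is a minimal heuristic sketch for Python projects. It reads the license metadata that installed packages declare through the standard `importlib.metadata` module and flags GPL-family licenses by keyword. Declared metadata can be missing or wrong, and keyword matching is crude, so treat hits as prompts for review rather than a compliance verdict.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional

# Weak-copyleft markers are checked first because "LGPL" contains "GPL".
WEAK_MARKERS = ("LGPL", "Lesser General Public", "MPL-", "Mozilla Public")
STRONG_MARKERS = ("GPL", "General Public License")


def classify(license_texts: Iterable[str]) -> Optional[str]:
    """Return 'weak', 'strong', or None using simple keyword matching."""
    joined = " ".join(license_texts)
    if any(marker in joined for marker in WEAK_MARKERS):
        return "weak"
    if any(marker in joined for marker in STRONG_MARKERS):
        return "strong"
    return None


def scan_installed() -> Dict[str, str]:
    """Map installed distribution names to a copyleft category, if any."""
    findings: Dict[str, str] = {}
    for dist in metadata.distributions():
        meta = dist.metadata
        texts = [meta.get("License") or "", meta.get("License-Expression") or ""]
        texts += [c for c in (meta.get_all("Classifier") or [])
                  if c.startswith("License ::")]
        category = classify(texts)
        if category:
            findings[meta.get("Name") or "<unknown>"] = category
    return findings


for name, category in sorted(scan_installed().items()):
    print(f"{name}: {category} copyleft")
```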

Vector 5: False Sense of Security

Developers assume that because AI generated it, it must be original and safe to use.

Dangerous assumptions:

Reality check:

The legal questions around AI-generated code are being litigated right now. Here's the current state:

Doe v. GitHub (the GitHub Copilot lawsuit)

Filed: November 2022

Status: Active as of 2024

Key allegations:

Significance:

This lawsuit could establish whether:

November 2024 ruling:

Judge Jon Tigar allowed copyright infringement claims to proceed, finding that plaintiffs plausibly alleged that Copilot output could be "substantially similar" to training data 1.

Getty Images v. Stability AI

Filed: February 2023

Status: Active

Issue: AI training on copyrighted images

While focused on images, this case could set a precedent for whether AI training constitutes infringement. If Getty wins, the reasoning could extend to code 6.

The New York Times v. Microsoft and OpenAI

Filed: December 2023

Status: Active

Issue: Training AI on copyrighted news articles

The Times alleges OpenAI's models reproduce copyrighted content verbatim. This directly addresses the memorization issue we see with code 14.

EU AI Act

Key provisions affecting code generation:

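Article 53: Obligations for providers of general-purpose AI models, including publishing a summary of training content and maintaining a copyright-compliance policy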

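Article 4(3) of the EU Copyright (DSM) Directive: rights holders can opt out of text and data mining, and Article 53 requires general-purpose AI providers to respect those opt-outs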

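Penalties: up to €35 million or 7% of global annual turnover for the most serious violations; for providers of general-purpose AI models, up to €15 million or 3% 4.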

Impact on AI coding tools:

Providers must disclose a sufficiently detailed summary of the content used to train their models.

This could force providers to:

Identifying license violations and IP contamination requires a multi-layered approach.

1. Code Similarity Detection Tools

ScanCode Toolkit — Open-source license and copyright detector

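ScanCode is typically run from the command line. As a sketch, assuming ScanCode Toolkit is installed and on your PATH, the helper below invokes it with its `--license`, `--copyright`, and `--json-pp` options. It then summarizes the detected licenses per file. The JSON field names differ between ScanCode releases, so the parser checks both a newer file-level SPDX field and an older per-match list. Verify both against the output of the version you run.

```python
import json
import subprocess
from pathlib import Path
from typing import Dict


def run_scancode(target: str, output: str = "scancode.json") -> dict:
    """Run ScanCode (installed separately) and load its JSON report."""
    subprocess.run(
        ["scancode", "--license", "--copyright", "--json-pp", output, target],
        check=True,
    )
    return json.loads(Path(output).read_text(encoding="utf-8"))


def licenses_by_file(report: dict) -> Dict[str, str]:
    """Map each scanned file to a detected license expression."""
    result: Dict[str, str] = {}
    for entry in report.get("files", []):
        if entry.get("type") != "file":
            continue
        # Assumed field names: newer releases expose a file-level SPDX
        # expression; older ones list per-match license keys instead.
        expr = entry.get("detected_license_expression_spdx")
        if not expr:
            keys = {m.get("spdx_license_key") for m in entry.get("licenses", [])}
            expr = " AND ".join(sorted(k for k in keys if k))
        if expr:
            result[entry["path"]] = expr
    return result
```

For example, `licenses_by_file(run_scancode("src/"))` maps each scanned file to a license expression that you can feed into a policy check.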

Fossology — License compliance software

Black Duck / Synopsys – Commercial code scanning

2. Code Clone Detection

CloneDR — Detects code clones even with variable renaming


NiCad — Near-miss clone detector
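CloneDR and NiCad use their own algorithms. To illustrate the general idea of near-miss clone detection, the sketch below substitutes a different, well-documented technique. It uses winnowing, the k-gram fingerprinting approach behind the MOSS plagiarism detector. Identifiers are normalized so that renaming alone does not break a match. The parameters `k` and `window` are illustrative defaults, not tuned values.

```python
import keyword
import re
import zlib
from typing import List, Set

TOKEN = re.compile(r"[A-Za-z_]\w*|\d+|\S")


def normalized_tokens(code: str) -> List[str]:
    """Tokenize, keeping Python keywords but replacing other identifiers with ID."""
    tokens = []
    for tok in TOKEN.findall(code):
        if (tok[0].isalpha() or tok[0] == "_") and not keyword.iskeyword(tok):
            tokens.append("ID")
        else:
            tokens.append(tok)
    return tokens


def fingerprints(code: str, k: int = 5, window: int = 4) -> Set[int]:
    """Hash every k-gram of tokens, then keep the minimum hash in each window."""
    toks = normalized_tokens(code)
    hashes = [zlib.crc32(" ".join(toks[i:i + k]).encode())
              for i in range(len(toks) - k + 1)]
    if not hashes:
        return set()
    if len(hashes) <= window:
        return {min(hashes)}
    return {min(hashes[i:i + window]) for i in range(len(hashes) - window + 1)}


def similarity(a: str, b: str) -> float:
    """Jaccard similarity of the two fingerprint sets (0.0 to 1.0)."""
    fa, fb = fingerprints(a), fingerprints(b)
    union = fa | fb
    return len(fa & fb) / len(union) if union else 0.0


a = "def total(items):\n    s = 0\n    for it in items:\n        s += it\n    return s\n"
b = "def add_up(values):\n    acc = 0\n    for v in values:\n        acc += v\n    return acc\n"
print(similarity(a, b))
```

The fingerprints use `zlib.crc32` rather than Python's built-in `hash()`. String hashing with `hash()` is randomized per process, so its fingerprints could not be compared across runs.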

3. AI-Generated Code Detection

GPTZero and DetectGPT — Identify AI-generated text

While designed for natural language, these tools can sometimes identify AI-generated code based on statistical patterns.

GitHub Copilot Detection:

Research tools can identify code likely generated by Copilot based on:

4. License Compatibility Analysis

FOSSA — Automated license compliance


It automatically flags dependencies whose licenses violate the license policy you configure.
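Policy checks of this kind usually operate on SPDX license expressions. The check below is a simplified, hypothetical sketch, not FOSSA's implementation or configuration format. It evaluates expressions against an allow-list and handles `OR` and `AND`, with `AND` binding more tightly as in the SPDX specification. It does not handle parentheses or `WITH` exceptions, and the allow-list and dependency names are made up for illustration.

```python
from typing import Dict, FrozenSet

# Hypothetical allow-list for a proprietary product.
ALLOWED: FrozenSet[str] = frozenset(
    {"MIT", "BSD-2-Clause", "BSD-3-Clause", "Apache-2.0", "ISC"}
)


def expression_allowed(expr: str, allowed: FrozenSet[str] = ALLOWED) -> bool:
    """True if at least one OR-alternative consists only of allowed licenses."""
    for alternative in expr.split(" OR "):
        terms = [t.strip() for t in alternative.split(" AND ")]
        if all(t in allowed for t in terms):
            return True
    return False


def policy_violations(dependencies: Dict[str, str]) -> Dict[str, str]:
    """Return the dependencies whose license expression fails the policy."""
    return {name: expr for name, expr in dependencies.items()
            if not expression_allowed(expr)}


deps = {"lib-a": "MIT", "lib-b": "MIT OR GPL-3.0-only", "lib-c": "AGPL-3.0-only"}
print(policy_violations(deps))
```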

5. Training Data Attribution (Emerging)

DataProvenance.org — Tracks AI training data sources

New initiatives are creating databases of:

AI Provider Transparency Tools:

Some providers are beginning to offer:

GitHub announced in 2023 that they're working on a feature to flag Copilot suggestions that match public code 15, though it's not yet widely available.

Here's how to use AI coding assistants while minimizing legal risk.

1. Establish Clear AI Usage Policies

Required policy elements:


2. Use Enterprise Tiers with IP Indemnification

When evaluating AI coding assistant providers, look for these critical contractual protections:

IP Indemnification:

Reference Tracking:

Training Data Separation:

Contract checklist:


3. Implement Automated License Scanning

Pre-commit hooks:

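Git runs an executable `.git/hooks/pre-commit` before each commit and aborts the commit if it exits non-zero. The following is a minimal Python sketch of such a check. It looks for copyleft license markers (SPDX identifiers and GNU license names) in the staged version of each added, copied, or modified file. The patterns are illustrative and will miss code copied without its license header, so this complements a full scanner rather than replacing it.

```python
import re
import subprocess
from typing import List

# Illustrative patterns; extend them to match your own license policy.
COPYLEFT_PATTERNS = [
    re.compile(r"SPDX-License-Identifier:\s*(?:A|L)?GPL-", re.IGNORECASE),
    re.compile(r"GNU (?:Affero |Lesser )?General Public License", re.IGNORECASE),
]


def staged_files() -> List[str]:
    """Paths of files added, copied, or modified in the index."""
    out = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [line for line in out.splitlines() if line]


def staged_text(path: str) -> str:
    """Read the staged (index) version of a file, not the working copy."""
    blob = subprocess.run(["git", "show", f":{path}"],
                          capture_output=True, check=True).stdout
    return blob.decode("utf-8", errors="ignore")


def has_copyleft_marker(text: str) -> bool:
    return any(p.search(text) for p in COPYLEFT_PATTERNS)


def main() -> int:
    """Print flagged files and return a non-zero exit code if any were found."""
    flagged = [p for p in staged_files() if has_copyleft_marker(staged_text(p))]
    for path in flagged:
        print(f"copyleft license marker in {path}: needs license review")
    return 1 if flagged else 0
```

To use it, save it as `.git/hooks/pre-commit`, make it executable, put a shebang such as `#!/usr/bin/env python3` on the first line, and end the file with `raise SystemExit(main())`.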

CI/CD integration:


4. Mark All AI-Generated Code

Convention: Add AI attribution comments


Benefits:

Automated tagging:

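Here is a sketch of what such a convention and automated tagging might look like. The helper wraps accepted AI output in begin/end comment markers that record the tool, the reviewer, and the date. The marker format and field names are hypothetical; choose whatever your team can search for consistently.

```python
from datetime import date
from typing import Optional

BEGIN = "# BEGIN AI-GENERATED"
END = "# END AI-GENERATED"


def tag_ai_code(code: str, tool: str, reviewer: str,
                when: Optional[date] = None) -> str:
    """Wrap a generated snippet in attribution markers (hypothetical format)."""
    when = when or date.today()
    header = f"{BEGIN} tool={tool} reviewed-by={reviewer} date={when.isoformat()}"
    return f"{header}\n{code.rstrip()}\n{END}\n"


print(tag_ai_code("def add(a, b):\n    return a + b\n",
                  "example-assistant", "alice", date(2024, 5, 1)))
```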

5. Conduct Regular IP Audits

Quarterly review process:

Step 1: Identify AI-generated code

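Suppose AI-generated regions are wrapped in `# BEGIN AI-GENERATED` / `# END AI-GENERATED` comments, a hypothetical convention. A short Python script can then inventory them across a repository for the quarterly review. This sketch counts the marked lines in each file and skips common vendor and tooling directories.

```python
import os
import re
from typing import Dict, Tuple

BEGIN = re.compile(r"#\s*BEGIN AI-GENERATED\b")
END = re.compile(r"#\s*END AI-GENERATED\b")
SKIP_DIRS = {".git", "node_modules", ".venv", "venv"}


def count_ai_lines(text: str) -> int:
    """Count lines between BEGIN/END AI-GENERATED markers (markers excluded)."""
    inside, count = False, 0
    for line in text.splitlines():
        if BEGIN.search(line):
            inside = True
        elif END.search(line):
            inside = False
        elif inside:
            count += 1
    return count


def find_ai_code(root: str, suffixes: Tuple[str, ...] = (".py",)) -> Dict[str, int]:
    """Walk `root` and report the number of marked lines in each matching file."""
    results: Dict[str, int] = {}
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames[:] = [d for d in dirnames if d not in SKIP_DIRS]
        for name in filenames:
            if name.endswith(suffixes):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as fh:
                    marked = count_ai_lines(fh.read())
                if marked:
                    results[path] = marked
    return results
```

Running `find_ai_code(".")` from the repository root lists each file that contains marked regions, together with its count of AI-generated lines.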

Step 2: Run license scans


Step 3: Code similarity check


Step 4: Legal review

6. Educate Developers on License Risk

Training curriculum:

Module 1: IP Basics

Module 2: AI-Specific Risks

Module 3: Practical Guidelines

Ongoing reminders:


7. Consider Self-Hosted AI Models

Benefits of on-premise models:

Options:

StarCoder / StarCoder2 — Open-source code generation models

Code Llama — Meta's code-specialized LLM

Tabby — Self-hosted AI coding assistant

Cost-benefit analysis:

| Factor | Cloud AI | Self-Hosted AI |
|---|---|---|
| Setup cost | Low (no infrastructure to build) | High ($50K-500K infra + ML talent) |
| Ongoing cost | Predictable subscription ($20-60/user/month) | Variable (compute, maintenance) |
| IP control | Limited (trust provider) | Complete (your infrastructure) |
| Data security | Depends on provider | Full control |
| Model quality | Excellent (latest models) | Good (may lag behind) |
| Compliance | Vendor-dependent | You control |

For enterprises with strict IP requirements (defense, finance, healthcare), self-hosted is often worth the investment.

8. Implement "Clean Room" Review Process

For critical code, use a two-person clean room process:

Process:

This process helps detect when AI has reproduced something too specific to be independently derivable.

AI providers have a responsibility to help prevent IP contamination. Here's what organizations should expect and demand from their providers:

Baseline requirement: Providers should implement code referencing features that:

Example implementation:


Rationale: Without attribution, developers unknowingly accept copyrighted code. Reference tracking puts the decision-making power back in human hands.
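Here is a hypothetical sketch of how a reference check could work. It indexes public snippets by hashes of overlapping line windows, then reports the repository and license of any indexed code that a suggestion overlaps. This is not how GitHub or any other provider implements code referencing. The class names, window size, and repository are made up for illustration.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, Iterator, Set


@dataclass(frozen=True)
class Source:
    repo: str        # hypothetical repository identifier
    license_id: str  # SPDX identifier of that repository's license


def _window_hashes(code: str, size: int) -> Iterator[str]:
    """Hash every run of `size` consecutive non-blank, whitespace-normalized lines."""
    lines = [" ".join(line.split()) for line in code.splitlines()]
    lines = [line for line in lines if line]
    for i in range(len(lines) - size + 1):
        yield hashlib.sha256("\n".join(lines[i:i + size]).encode()).hexdigest()


class ReferenceIndex:
    def __init__(self, window: int = 4) -> None:
        self.window = window
        self._index: Dict[str, Set[Source]] = {}

    def add(self, code: str, source: Source) -> None:
        for digest in _window_hashes(code, self.window):
            self._index.setdefault(digest, set()).add(source)

    def match(self, suggestion: str) -> Set[Source]:
        """Return every indexed source that shares a line window with the suggestion."""
        hits: Set[Source] = set()
        for digest in _window_hashes(suggestion, self.window):
            hits |= self._index.get(digest, set())
        return hits


index = ReferenceIndex(window=2)
index.add("a = 1\nb = 2\nc = 3\n", Source("example/gpl-project", "GPL-3.0-only"))
print(index.match("b = 2\nc = 3\n"))
```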

Baseline requirement: Providers should offer configuration options to:

Rationale: Different organizations have different license compatibility requirements. A provider that only offers "all or nothing" puts organizations at risk when they need to avoid copyleft licenses.

Ideal future state:


This would let organizations make informed decisions about risk.
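The sketch below shows one hypothetical shape for that kind of metadata. It models a suggestion together with its matched sources, derives a coarse risk label, and serializes the result to JSON. The field names and risk rules are illustrative assumptions, not any provider's format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Match:
    repository: str
    license_id: str
    matched_lines: int


@dataclass
class Suggestion:
    code: str
    matches: List[Match] = field(default_factory=list)

    def risk(self) -> str:
        """'high' for GPL/AGPL matches, 'review' for any other match, else 'none'."""
        licenses = {m.license_id for m in self.matches}
        if any(lic.startswith(("GPL", "AGPL")) for lic in licenses):
            return "high"
        return "review" if licenses else "none"

    def to_json(self) -> str:
        payload = asdict(self)
        payload["risk"] = self.risk()
        return json.dumps(payload, indent=2)


suggestion = Suggestion(
    code="def parse(data): ...",
    matches=[Match("example/parser-lib", "GPL-3.0-only", 14)],
)
print(suggestion.to_json())
```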

Before moving to the next chapter, make sure you understand:

The bottom line: Using AI coding assistants without IP protection is like accepting code contributions from anonymous internet strangers without reviewing the license. You wouldn't do that manually — don't do it with AI.

[1] Courthouse News Service (2024) – Judge trims code-scraping suit against Microsoft, GitHub

[2] BlackDuck (2025) – Open Source Security and Risk Analysis Report

[3] Open Source Initiative – Licenses by Name

[4] European Parliament (2024) – EU AI Act: First regulation on artificial intelligence

[5] U.S. Copyright Office (2023) – Copyright Registration Guidance: Works Containing Material Generated by Artificial Intelligence

[6] The Verge (2023) – Getty Images sues AI art generator Stable Diffusion

[7] MongoDB FAQ (2018) – Server Side Public License FAQ

[8] Elastic Blog (2018) – Doubling down on open

[9] Supreme Court of the United States (2021) – Google LLC v. Oracle America, Inc.

[10] NYU Research (2023) – Memorization in Large Language Models

[11] GitHub – GitHub Copilot: How it works

[12] SoftwareOne (2023) – GitHub Copilot and Open Source License Compliance

[13] Free Software Foundation – GPL FAQ: Does the GPL require that source code of modified versions be posted to the public?

[14] New York Times (2023) – The Times Sues OpenAI and Microsoft Over A.I. Use of Copyrighted Work

[15] GitHub Blog (2023) – Introducing code referencing for GitHub Copilot

[16] GitHub – GitHub Copilot Trust Center

[17] AWS – Amazon CodeWhisperer: Reference Tracker
