The AI Coding Reality Check

Understanding the Security Risks

Here's the narrative so far: AI coding tools are incredibly popular (Chapter 1), they introduce serious security risks (Chapter 2), and banning them doesn't work (Chapter 3). So the path forward is managed adoption with proper guardrails.

But there's a crucial question we haven't fully addressed: Do AI coding tools actually make developers more productive?

The answer is more nuanced than most people realize. The truth is both yes and no — it depends on the task, the developer, and how the tool is used.

Welcome to the productivity paradox.

If you've read vendor marketing materials or early adopter testimonials, you've probably seen bold claims about dramatic productivity gains.

Some of these claims are based on real research. Others are based on selective metrics or self-reported data that doesn't hold up under scrutiny.

Let's look at what the actual research shows.

A McKinsey study [1] found that developers could complete tasks up to twice as fast with generative AI. Documenting code functionality took half the time, writing new code took nearly half the time, and optimizing existing code took nearly two-thirds the time. AI excels at writing boilerplate code, creating documentation, refactoring code, translating code between languages, and setting up standard functionality.

However, time savings varied significantly with task complexity and developer experience. On tasks that developers deemed high in complexity, time savings shrank to less than 10 percent. In some cases, developers with less than a year of experience took 7 to 10 percent longer with AI tools than without them. The reasons were practical. Junior developers spent time reviewing and correcting AI suggestions, and complex tasks required developer expertise to validate outputs. The tools sometimes gave incorrect coding recommendations and even introduced errors into the code. Developers also had to "spoon-feed" the tool to get it to debug code correctly.
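Studies report the same effect in different forms: "twice as fast," "half the time," or "percent time savings." It helps to convert between them. The worked conversion below writes $T_{\text{without}}$ and $T_{\text{with}}$ for task time without and with AI. This is our own notation, not McKinsey's. It applies the conversion to the McKinsey figures:

```latex
\begin{aligned}
&S = \frac{T_{\text{without}}}{T_{\text{with}}}, \qquad
  \text{time savings} = 1 - \frac{T_{\text{with}}}{T_{\text{without}}} = 1 - \frac{1}{S} \\[6pt]
&\text{half the time: } \tfrac{T_{\text{with}}}{T_{\text{without}}} = \tfrac{1}{2}
  \;\Rightarrow\; S = 2,\ \text{savings} = 50\% \\
&\text{nearly two-thirds the time: } \tfrac{T_{\text{with}}}{T_{\text{without}}} \approx \tfrac{2}{3}
  \;\Rightarrow\; S \approx 1.5,\ \text{savings} \approx 33\% \\
&\text{savings} < 10\% \;\Rightarrow\; S < \tfrac{1}{0.9} \approx 1.11 \\
&\text{7 to 10\% longer: } \tfrac{T_{\text{with}}}{T_{\text{without}}} = 1.07 \text{ to } 1.10
  \;\Rightarrow\; S \approx 0.93 \text{ to } 0.91
\end{aligned}
```

So code optimization at "nearly two-thirds the time" is roughly a 1.5x speedup, not a 2x one. The slowdown seen for the least experienced developers is a speedup below 1.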

A field experiment with 758 consultants at Boston Consulting Group examined AI performance on realistic, complex, and knowledge-intensive tasks, testing tasks both within and outside the "jagged technological frontier" of AI capabilities. Inside the frontier (tasks within AI capabilities), consultants using AI completed 12.2% more tasks on average, completed tasks 25.1% more quickly, and produced significantly higher quality results (more than 40% higher quality compared to a control group). Outside the frontier (tasks beyond AI capabilities), consultants using AI were 19 percentage points less likely to produce correct solutions compared to those without AI. This reveals a critical insight: AI capabilities are powerful but uneven. Some tasks that appear simple prove difficult for AI, while others that seem complex are handled well.

GitHub has studied Copilot's impact through both surveys [3] and a controlled experiment [4]. In the surveys, over 90% of developers agreed that GitHub Copilot helped them complete tasks faster, especially repetitive ones. Between 60% and 75% reported feeling more fulfilled with their job, less frustrated when coding, and able to focus on more satisfying work. In the controlled experiment with 95 professional developers, the group using GitHub Copilot completed an HTTP server implementation task 55.8% faster than the control group (95% confidence interval: 21% to 89%). Important context: the treated group's success rate was 7 percentage points higher than the control group's, but this difference was not statistically significant. The experiment also measured a single, well-defined coding task, so long-term maintenance costs and debugging time weren't fully accounted for.
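One caveat applies when reading the headline figure. As we read the paper [4], "55.8% faster" means the Copilot group needed 55.8% less time. That corresponds to a speedup ratio of about 2.26x, not 1.56x. Verify that reading against the paper before reusing it. The minimal Python sketch below converts the point estimate and the confidence-interval bounds under that assumption. The function name is our own.

```python
def speedup_from_time_reduction(reduction: float) -> float:
    """Convert a fractional reduction in completion time (0.558 = 55.8% less time)
    into a speedup ratio T_without / T_with."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


# Point estimate and 95% CI bounds from the Copilot experiment,
# interpreted here (an assumption) as reductions in task completion time.
for label, r in [("CI lower", 0.21), ("point estimate", 0.558), ("CI upper", 0.89)]:
    print(f"{label:>14}: {r:.1%} less time -> {speedup_from_time_reduction(r):.2f}x speedup")
```

Under this reading, the 21% to 89% interval spans roughly a 1.3x to 9x speedup. A single, small experiment pins the effect down only loosely.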

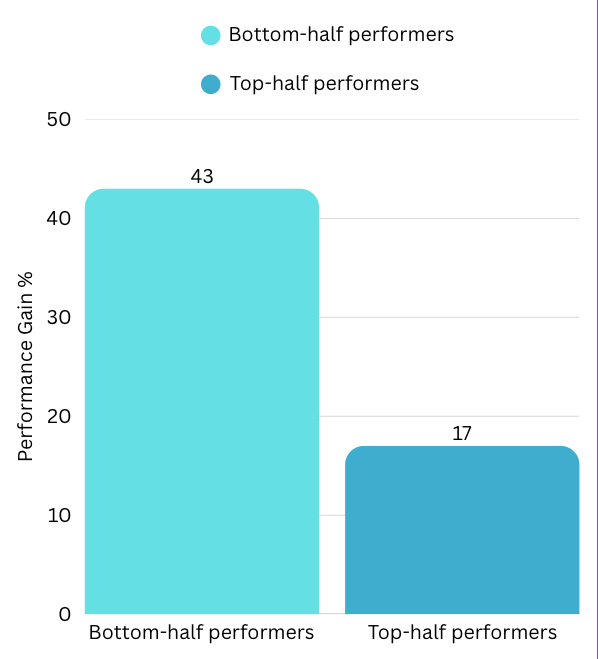

Experience level matters significantly. In the Copilot experiment [4], three groups benefited more: less experienced developers, developers with heavy coding loads (more hours of coding per day), and older developers (the 25-44 age group). The BCG study [2] found a similar pattern among consultants. Those in the bottom half of the skill distribution improved their performance by 43%, compared with 17% for those in the top half.

Performance Gains by Skill Level

Perception and reality often diverge, though not always in the same direction. Participants using GitHub Copilot estimated a 35% productivity increase on average, while the measured speedup was 55.8%. In this experiment, developers underestimated the gains. Other research shows the opposite pattern, with developers overestimating gains. This happens particularly when the measurement covers overall project velocity rather than isolated tasks, includes complex work beyond AI's capabilities, or accounts for downstream costs such as debugging and refactoring.

Based on the research, AI coding assistants genuinely boost productivity in specific scenarios:

✅ Boilerplate and Repetitive Code

Generative AI can handle routine tasks such as auto-filling standard functions used in coding, completing coding statements as the developer is typing, and documenting code functionality in a given standard format.

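Use cases: writing boilerplate code, setting up standard functionality, and translating code between languages.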

Why it works: These are pattern-matching tasks with minimal security or business logic complexity.

✅ Accelerating Updates to Existing Code

When using AI tools with effective prompting, developers could make more changes to existing code faster. For instance, to adapt code from an online coding library, developers would copy and paste it into a prompt and submit iterative queries requesting the tool to adjust based on their criteria.

✅ Learning Unfamiliar Technologies

The technology can help developers rapidly brush up on an unfamiliar code base, language, or framework. When developers face a new challenge, they can turn to these tools to get help they might otherwise seek from an experienced colleague—explaining new concepts, synthesizing information, and providing step-by-step guides.

Why it works: AI accelerates the learning curve by providing context-specific examples and explanations.

✅ Refactoring and Modernization

With the right upskilling and enterprise enablers, generative AI tools can help with code refactoring, which can enable leaders to make a dent in traditionally resource-intensive modernization efforts.

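Use cases: refactoring existing code, translating code between languages, and chipping away at large modernization efforts.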
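Refactoring suits AI assistance partly because correctness is well defined: the new code must behave exactly like the old. The hypothetical sketch below shows a cleanup an assistant might propose. The shipping-fee functions and rates are invented for illustration. The sketch pairs the cleanup with the kind of behavior-preservation check a reviewer should insist on.

```python
# Hypothetical legacy function: duplicated nested branches computing a shipping fee.
def shipping_fee_legacy(weight_kg, express):
    if express:
        if weight_kg <= 1:
            fee = 10
        else:
            fee = 10 + (weight_kg - 1) * 4
    else:
        if weight_kg <= 1:
            fee = 5
        else:
            fee = 5 + (weight_kg - 1) * 2
    return fee


# Refactored version of the kind an assistant might suggest:
# a rate table replaces the duplicated branches.
RATES = {True: (10, 4), False: (5, 2)}  # express -> (base fee, fee per kg above 1 kg)


def shipping_fee(weight_kg: float, express: bool) -> float:
    base, per_kg = RATES[express]
    return base + max(weight_kg - 1, 0) * per_kg


# Behavior-preservation check: the refactor must match the legacy output.
for w in [0.5, 1, 1.5, 2, 10]:
    for express in (True, False):
        assert shipping_fee(w, express) == shipping_fee_legacy(w, express), (w, express)
print("refactor preserves behavior on sample inputs")
```

The equality check only covers the sampled inputs. On a real codebase, the existing test suite plays this role, and its coverage limits how far the refactor can be trusted.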

✅ Documentation Generation

Documenting code functionality for maintainability can be completed in half the time with generative AI tools.

Why it works: AI can infer intent from code structure and generate readable explanations.

✅ Junior Developer Acceleration

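The evidence here is mixed. In the BCG study [2], consultants across the skills distribution benefited significantly from AI augmentation. Those below the average performance threshold improved by 43%, and those above by 17%. The Copilot experiment [4] likewise found larger gains for less experienced developers. McKinsey [1], however, found that developers with less than a year of experience sometimes took 7 to 10 percent longer with AI. The boost seems to depend on having enough foundational skill to validate the tool's output.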

Why it works: Junior developers benefit most from having an "always available" assistant to answer questions and suggest approaches.

There are scenarios where AI coding assistants make things worse:

❌ Security-Critical Code

Research participants reported that AI tools won't know the specific needs of a given project and organization. Such knowledge is vital when coding to ensure the final software product can seamlessly integrate with other applications, meet a company's performance and security requirements, and ultimately solve end-user needs.

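Why it fails: AI tools don't know a project's specific security and performance requirements or its organizational context. The security vulnerabilities they can introduce are also easy to miss in review.

Examples: authentication, authorization, cryptography, payment processing, access control.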
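A classic example makes "easy to miss in review" concrete. It is illustrative only and not drawn from the studies cited here. The first lookup builds SQL with string formatting: it passes every normal test, yet it is open to SQL injection. The parameterized version is safe. The table and function names are hypothetical. The sketch uses Python's built-in `sqlite3` module with an in-memory database.

```python
import sqlite3

# Hypothetical in-memory table for the demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "viewer")])


def find_user_unsafe(name: str):
    # Vulnerable: user input is spliced directly into the SQL text.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str):
    # Safe: the driver binds `name` as data, never as SQL syntax.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


payload = "x' OR '1'='1"
print(find_user_unsafe("bob"))    # normal input: both versions agree
print(find_user_safe("bob"))
print(find_user_unsafe(payload))  # injection: the WHERE clause matches every row
print(find_user_safe(payload))    # payload treated as a literal name: no rows
```

Both functions return the same result for ordinary input. This is why a quick review or a happy-path test will not catch the difference.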

❌ Complex Business Logic

AI-based tools are better suited for answering simple prompts, such as optimizing a code snippet, than complicated ones, like combining multiple frameworks with disparate code logic. To obtain a usable solution to satisfy a multifaceted requirement, developers first had to either combine the components manually or break up the code into smaller segments.

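Why it fails: The tools handle narrowly scoped prompts far better than multifaceted requirements that combine frameworks with disparate logic. Developers end up decomposing the problem or stitching the solution together themselves.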

❌ Tasks Requiring Human Oversight

Participant feedback signaled three areas where human oversight and involvement were crucial: examining code for bugs and errors, contributing organizational context, and navigating tricky coding requirements.

❌ Novel Architecture or Design

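Why it fails: Novel designs are likely to fall outside AI's frontier. There, the BCG study found AI users 19 percentage points less likely to produce correct solutions. AI-assisted ideas also tend to converge, offering less diversity in solutions.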

Even when AI speeds up initial coding, there are hidden costs. In McKinsey's study [1], using AI tools did not sacrifice quality for speed when the developer and tool collaborated. Code quality with respect to bugs, maintainability, and readability was marginally better in AI-assisted code. However, participant feedback indicates that developers actively iterated with the tools to achieve that quality. Quality holds up only when developers actively engage with and refine AI outputs.

A second hidden cost is sameness. In the BCG study [2], participants using AI produced higher-quality ideas than those working without AI, but with markedly less variability. AI may raise the average while homogenizing outputs, reducing innovation and creative diversity in solutions.

The BCG authors [2] also flag an immediate danger: people may stop delegating work inside the frontier to junior workers, creating long-term training deficits. A related cost applies to code. When AI writes significant portions of a codebase, developers may not fully understand what they committed. Onboarding new team members becomes harder, and debugging slows down because no one knows how the code works.

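Based on the research above, here are realistic productivity expectations:

Best Case Scenario (well-defined, routine tasks within AI's frontier): substantial speedups. Documentation and new code took roughly half the time in McKinsey's study, and the Copilot group finished its task 55.8% faster.

Mixed Workload Scenario (a combination of simple and complex tasks): gains somewhere between the two extremes, determined largely by how much of the work falls inside the frontier.

Problematic Scenario (complex, outside-the-frontier work): little or no gain, and possibly worse results. McKinsey saw time savings shrink below 10 percent on high-complexity tasks. BCG consultants working outside the frontier were 19 percentage points less likely to produce correct solutions.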
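The Mixed Workload Scenario is where intuition misleads most. A team that doubles its speed on routine work does not double its overall speed. One way to estimate a blended figure is an Amdahl's-law-style calculation. It is swapped in here as an illustration and is not a method from the cited studies. The minimal sketch assumes you can split baseline (no-AI) time into task categories and estimate a speedup for each. The `TaskMix` type, the shares, and the speedups are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TaskMix:
    share: float    # fraction of baseline (no-AI) time spent on this kind of task
    speedup: float  # T_without / T_with for this kind of task (below 1.0 = AI slows it down)


def overall_speedup(mix: list[TaskMix]) -> float:
    """Amdahl-style blend: time with AI is the sum of each share divided by
    its own speedup; the overall speedup is the inverse of that total."""
    if abs(sum(t.share for t in mix) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    time_with_ai = sum(t.share / t.speedup for t in mix)
    return 1.0 / time_with_ai


# Hypothetical workload: 40% routine work at 2x, 40% moderate work at 1.1x,
# and 20% complex work where AI slows things down slightly (0.95x).
mix = [TaskMix(0.4, 2.0), TaskMix(0.4, 1.1), TaskMix(0.2, 0.95)]
print(f"overall speedup: {overall_speedup(mix):.2f}x")
```

With these made-up numbers, the blend comes out to roughly 1.3x, far below the 2x achieved on the routine slice. The complex work keeps most of its original duration and dominates the total.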

Instead of asking "Did AI make us faster?", focus on outcomes rather than activity. Maximizing productivity gains and minimizing risks when deploying generative AI-based tools will require engineering leaders to take a structured approach that encompasses generative AI training and coaching, use case selection, workforce upskilling, and risk controls.

Track these key metrics: task completion rate (percentage of tasks successfully completed), quality metrics (bug rates, security vulnerabilities, code maintainability), time to value (end-to-end delivery time, not just coding time), and developer experience (developers using generative AI-based tools were more than twice as likely to report overall happiness, fulfillment, and a state of flow).
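The first three metrics can be computed from ordinary work-item records; developer experience needs a survey instead. The minimal sketch below assumes a hypothetical record format. The `WorkItem` fields and the `summarize` helper are illustrative names, not part of any particular tool, and the sketch assumes a non-empty list of items.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class WorkItem:
    # Hypothetical record format; map these fields onto your own tracker's data.
    opened: datetime               # when work on the item started
    delivered: Optional[datetime]  # when it shipped; None if never completed
    bugs_found: int = 0            # defects later traced back to this item
    security_findings: int = 0     # vulnerabilities later traced back to this item


def summarize(items: list[WorkItem]) -> dict[str, float]:
    """Outcome metrics: completion rate, quality per completed item, and
    end-to-end delivery time (start to ship, not just time spent coding)."""
    done = [i for i in items if i.delivered is not None]
    n_done = max(len(done), 1)  # avoid dividing by zero when nothing shipped
    hours = [(i.delivered - i.opened).total_seconds() / 3600 for i in done]
    return {
        "completion_rate": len(done) / len(items),
        "bugs_per_completed_item": sum(i.bugs_found for i in done) / n_done,
        "security_findings_per_completed_item": sum(i.security_findings for i in done) / n_done,
        "median_hours_to_delivery": median(hours) if hours else float("nan"),
    }


items = [
    WorkItem(datetime(2024, 1, 1, 9), datetime(2024, 1, 2, 9), bugs_found=1),
    WorkItem(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 21)),
    WorkItem(datetime(2024, 1, 3, 9), None),
]
print(summarize(items))
```

Measuring delivery from start to ship, rather than coding time alone, keeps review, rework, and debugging inside the number. Those are the downstream costs a speed-only metric misses.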

Two predominant models emerged for effective AI collaboration: "Centaur" behavior (strategic division of labor between humans and AI) and "Cyborg" behavior (intricate integration where humans and AI work closely together at the subtask level). Centaurs switch between AI and human tasks based on strengths of each. Cyborgs integrate AI throughout their workflow with continuous interaction. Both approaches can be effective when used strategically.

Based on the research findings, organizations should take a comprehensive approach. Provide training and coaching by including best practices and hands-on exercises for inputting natural-language prompts into the tools (prompt engineering). Workshops should equip developers with an overview of generative AI risks and best practices in reviewing AI-assisted code. For developers with less than a year of experience, research suggests a need for additional coursework in foundational programming principles to achieve the productivity gains observed among those with more experience.

Pursue the right use cases. While there is tremendous industry buzz around generative AI's ability to generate new code, research shows that the technology can have impact across many common developer tasks, including refactoring existing code. Using multiple tools can be more advantageous than just one. Participants indicated that conversational AI excelled at answering questions when refactoring code, while code-specific tools excelled at writing new code. When developers used both tools within a given task, they realized an additional time improvement of 1.5 to 2.5 times.

Manage new risks. New data, intellectual-property, and regulatory risks are emerging with generative AI-based tools. Leaders should consider potential risks such as data privacy and third-party security, legal and regulatory changes, AI behavioral vulnerabilities, ethics and reputational issues, and security vulnerabilities that can crop up in AI-generated code.

Plan for skill shifts. As developers' productivity increases, leaders will need to be prepared to shift staff to higher-value tasks. Baselining productivity and continuously measuring improvement can reveal new capacity as it emerges across the organization.
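Baselining means capturing the same metrics before rollout and reporting each one relative to that baseline. "Better" points up for some metrics and down for others. The minimal sketch below uses hypothetical metric names and numbers and assumes every baseline value is nonzero.

```python
# Hypothetical metric names; True means a higher value is an improvement.
HIGHER_IS_BETTER = {
    "completion_rate": True,
    "bugs_per_completed_item": False,
    "median_hours_to_delivery": False,
}


def compare_to_baseline(baseline: dict[str, float], current: dict[str, float]) -> dict[str, str]:
    """Report each metric's relative change against its pre-rollout baseline.
    Assumes every baseline value is nonzero."""
    report = {}
    for name, higher_is_better in HIGHER_IS_BETTER.items():
        before, after = baseline[name], current[name]
        change = (after - before) / before
        if change == 0:
            verdict = "unchanged"
        elif (change > 0) == higher_is_better:
            verdict = "improved"
        else:
            verdict = "worse"
        report[name] = f"{change:+.1%} ({verdict})"
    return report


baseline = {"completion_rate": 0.80, "bugs_per_completed_item": 0.30, "median_hours_to_delivery": 20.0}
after_rollout = {"completion_rate": 0.84, "bugs_per_completed_item": 0.36, "median_hours_to_delivery": 16.0}
for name, result in compare_to_baseline(baseline, after_rollout).items():
    print(f"{name}: {result}")
```

In this made-up example, delivery got 20% faster while bugs per item rose 20%. Asking only "Did AI make us faster?" would hide exactly that trade-off.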

The productivity paradox resolves when you understand this:

AI coding assistants are powerful tools for specific tasks within their frontier, not universal productivity multipliers.

McKinsey [1] argues that generative AI is poised to transform software development in a way no other tooling or process improvement has. With today's generative AI-based tools, developers can already complete some tasks up to twice as fast. But tooling alone is not enough to unlock the technology's full potential.

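The organizations seeing real productivity gains are those who train and coach their developers, pursue the right use cases, manage the new risks, and plan for skill shifts.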

We've now covered the full context:

- why developers love AI (Chapter 1: real productivity benefits exist for specific tasks)
- the security risks (Chapter 2: AI-generated code can introduce vulnerabilities)
- why bans fail (Chapter 3: Shadow AI makes things worse)
- the productivity truth (this chapter: AI helps within its frontier and hurts outside it)

In the next chapter, we'll shift from problems to solutions with When AI Helps vs. When It Hurts, a practical framework for deciding when to use AI coding assistants and when to avoid them.

Before moving on, make sure you understand these key takeaways:

[1] McKinsey & Company (2023) – Unleashing developer productivity with generative AI

[2] Dell'Acqua, F., et al. (2023) – Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality, Harvard Business School Working Paper 24-013

[3] Kalliamvakou, E. (2022) – Research: quantifying GitHub Copilot's impact on developer productivity and happiness, GitHub Blog

[4] Peng, S., Kalliamvakou, E., Cihon, P., & Demirer, M. (2023) – The Impact of AI on Developer Productivity: Evidence from GitHub Copilot, arXiv:2302.06590

[5] GitLab (2024) – How AI helps DevSecOps teams improve productivity
