The AI Coding Reality Check

Understanding the Security Risks

In the previous chapters, we've explored specific vulnerabilities: data leakage, insecure code patterns, supply chain risks, and IP contamination. But there's a meta-problem that makes all of these worse: developers trust AI-generated code more than they should.

This chapter examines the psychological and organizational factors that create a false sense of security around AI coding assistants, why this trust is misplaced, and how it leads to real security incidents.

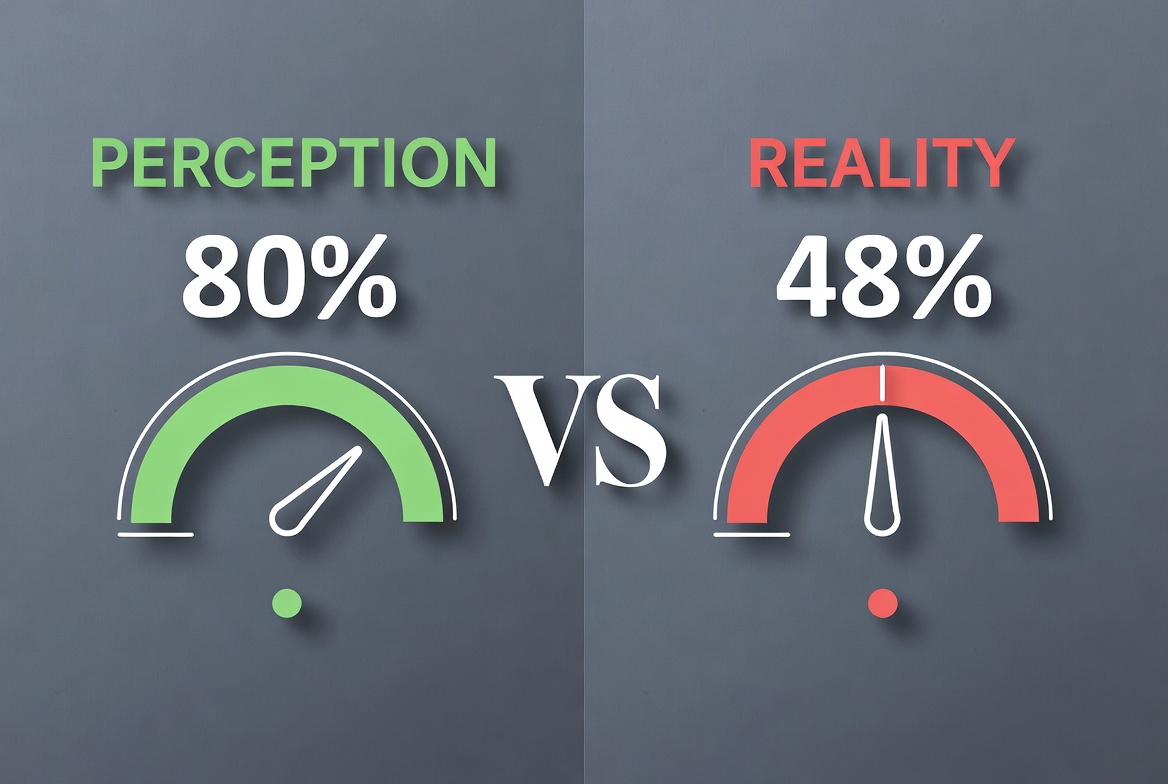

Here's the uncomfortable reality: AI coding assistants simultaneously make developers feel more confident about security while producing code that is less secure.

Research shows a clear pattern:

This creates a perfect storm: vulnerable code being written faster, reviewed less carefully, and shipped with more confidence than it deserves.

Understanding why developers trust AI code too much is essential to building effective countermeasures.

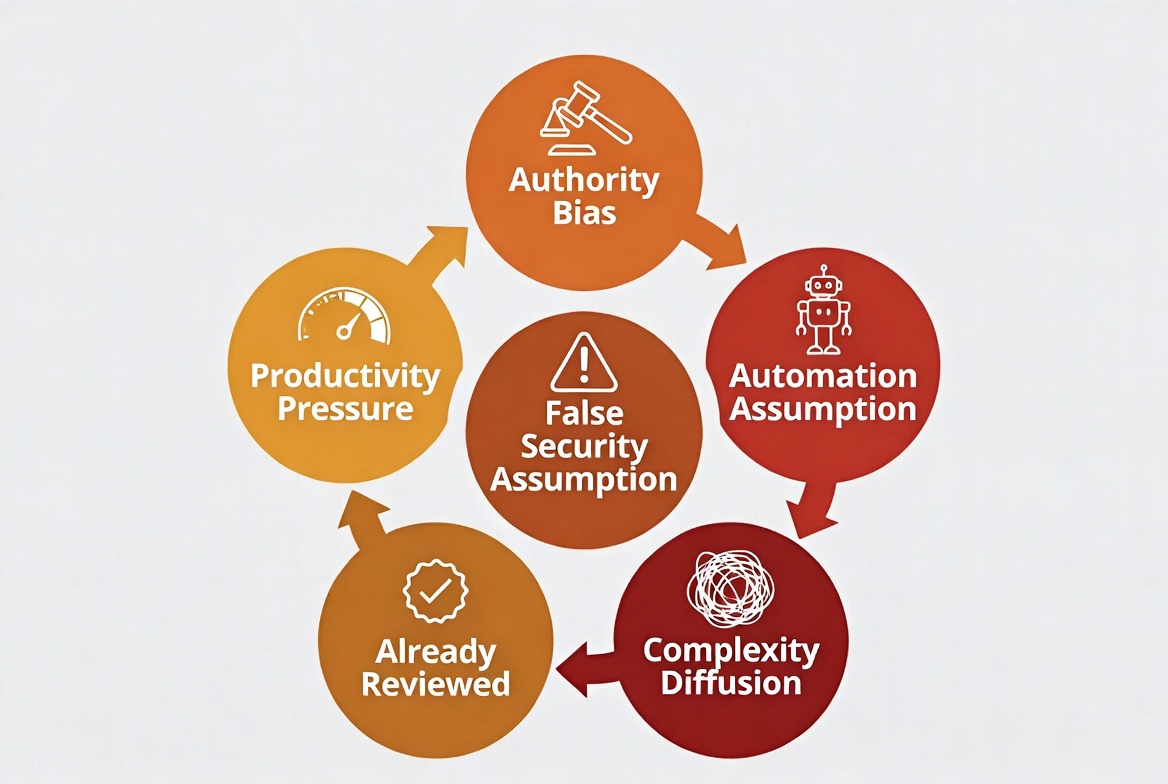

1. The Authority Bias

When AI generates code, it presents it with confidence and structure. There's no "I think" or "maybe try" — just clean, formatted code that looks professional. This triggers our instinct to trust authoritative sources.

Humans tend to trust authority figures and systems that present information confidently. AI coding assistants present code with the same authority as official documentation or senior engineer recommendations.

Real-world manifestation:

Why it's trusted: Professional docstring, clear logic flow, uses standard libraries. It looks authoritative.

Why it's wrong: SQL injection vulnerability, MD5 is cryptographically broken, no rate limiting, no timing attack protection.

A human reviewer might catch these issues in human-written code, but the "AI generated this" label creates an authority halo that reduces scrutiny.

2. The Automation Assumption

When we automate processes, we unconsciously assume they include quality checks. When GPS calculates a route, we assume it checked for traffic, road closures, and efficiency. When spell-check underlines something, we assume it analyzed grammar rules.

Decades of using automated tools that do include built-in quality checks have trained us to trust automation. We assume AI coding assistants perform security analysis as part of code generation.

The reality: AI coding assistants are generative, not evaluative. They generate code based on patterns, but they don't evaluate it for security, correctness, or quality. There's no "security check" happening before the code is presented to you.

Real-world manifestation:

A developer asks Copilot: "Create an API endpoint to upload user profile images."

Copilot generates:

What developers assume happened: The AI considered security implications, file type restrictions, path traversal risks, and size limits.

What actually happened: The AI pattern-matched against thousands of upload examples and generated statistically likely code. No security evaluation occurred.

What's wrong: Path traversal vulnerability (can overwrite system files), no file type validation (can upload malicious executables), no size limits (DoS risk), no authentication check.
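
A minimal Python sketch of the server-side checks this endpoint was missing follows. It requires authentication, caps the size, allowlists extensions, and checks the leading "magic" bytes of the content. It also stores the file under a server-chosen name that must stay inside the upload directory. The size limit, allowed formats, and function and exception names are assumptions for illustration.

```python
from pathlib import Path

MAX_UPLOAD_BYTES = 5 * 1024 * 1024                      # assumed 5 MiB cap
ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg", ".gif"}  # assumed allowlist
# Leading "magic" bytes of PNG, JPEG, and GIF files.
IMAGE_SIGNATURES = (b"\x89PNG\r\n\x1a\n", b"\xff\xd8\xff", b"GIF87a", b"GIF89a")


class UploadRejected(ValueError):
    """Raised when an upload fails validation."""


def profile_image_path(
    upload_dir: str, user_id: str, filename: str, data: bytes, authenticated: bool
) -> Path:
    """Validate an upload and return the path where it may be stored."""
    if not authenticated:
        raise UploadRejected("authentication required")
    if len(data) > MAX_UPLOAD_BYTES:
        raise UploadRejected("file too large")
    ext = Path(filename).suffix.lower()
    if ext not in ALLOWED_EXTENSIONS:
        raise UploadRejected(f"extension {ext!r} not allowed")
    if not data.startswith(IMAGE_SIGNATURES):
        raise UploadRejected("content does not look like an allowed image")
    # The client's filename is never used as a path; the server picks the name.
    base = Path(upload_dir).resolve()
    target = (base / f"{user_id}{ext}").resolve()
    # Defense in depth: the final path must sit directly inside upload_dir.
    if target.parent != base:
        raise UploadRejected("path escapes the upload directory")
    return target
```

A magic-byte check does not prove a file is a harmless image; re-encoding uploads with an imaging library is a stronger option when the format matters.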

3. The Complexity Diffusion Effect

When something is complex and we don't fully understand it, we attribute capabilities to it beyond what it actually has. AI models are complex black boxes, which leads developers to assume they possess understanding they don't have.

When we can't explain how something works, we tend to overestimate its capabilities. "If it can generate this complex code, surely it understands security implications."

The reality: AI models use statistical pattern matching at an enormous scale. They don't "understand" security in the way humans do. They can't reason about threat models, attack vectors, or security boundaries.

Real-world example:

A developer prompts: "Write a password reset function."

AI generates sophisticated code with email verification, token generation, expiration handling, database updates — all looking very professional and complete.

What developers infer: "This AI clearly understands password reset flows, so it must have considered security best practices."

What developers miss:

- Reset tokens generated with a predictable random source (Math.random())

The complexity of the generated code creates an illusion of security expertise that isn't there.

4. The "Already Reviewed" Perception

When code arrives polished and formatted, developers unconsciously treat it as if it's been through an initial review. It feels like receiving code from a colleague rather than writing from scratch.

We use heuristics to allocate mental effort. Well-formatted, syntactically correct code feels "later stage" in the development process, triggering less thorough review.

Research evidence: Studies show code reviewers spend significantly less time reviewing AI-generated code than human-written code, even when told the source [3]. The polished presentation signals "this is further along" and reviewers shift to surface-level checks.

Real-world manifestation:

Scenario A — Human-written rough code:

Reviewer thinking: "This needs work. What about validation? Error handling? Authorization? Is this safe from SQL injection? Let me check the ORM docs..."

Scenario B — AI-generated polished code:

Reviewer thinking: "This looks good. Type hints, docstring, error handling, logging. Looks like someone put thought into this. Approved."

What the reviewer missed: No authorization check (anyone can fetch any user's data), error logging might expose sensitive information, broad exception catching hides issues, no input validation.

The professional formatting and structure created a false sense that the code had already been carefully considered.
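
The missing authorization check is short to write. This sketch assumes a hypothetical `User` type with a `role` field and an in-memory profile store in place of the database. A user may read only their own profile unless they are an admin, input is validated, and specific exceptions are raised instead of catching everything.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class User:
    id: int
    role: str  # assumed roles: "user" or "admin"


class Forbidden(Exception):
    pass


class NotFound(Exception):
    pass


# Hypothetical stand-in for the database.
PROFILES: Dict[int, dict] = {
    1: {"id": 1, "email": "user1@example.com"},
    2: {"id": 2, "email": "user2@example.com"},
}


def get_user_profile(requester: User, target_id: int) -> dict:
    """Return target_id's profile only if requester is allowed to see it."""
    # Input validation: reject anything that is not a positive integer id.
    if isinstance(target_id, bool) or not isinstance(target_id, int) or target_id <= 0:
        raise ValueError("invalid user id")
    # Authorize before lookup, so a denied caller cannot probe which ids exist.
    if requester.id != target_id and requester.role != "admin":
        raise Forbidden(f"user {requester.id} may not read user {target_id}")
    profile = PROFILES.get(target_id)
    if profile is None:
        raise NotFound(f"user {target_id}")
    return profile
```

The calling handler would map `Forbidden` and `NotFound` to 403 and 404 responses and log only the ids involved, not profile contents.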

5. The Productivity Pressure

When organizations measure developer velocity — story points completed, features shipped, commits per week — and AI tools demonstrably increase these metrics, there's organizational pressure to accept AI output with minimal friction.

Questioning AI output slows you down. If your peer is shipping features 30% faster with AI and you're spending extra time scrutinizing every AI suggestion, you feel pressure to "keep up."

Organizational dynamics:

Real-world manifestation:

Two developers on the same team:

Developer A (cautious):

Developer B (fast-moving):

Sprint retrospective:

This creates a race-to-the-bottom dynamic where thorough security review is penalized by velocity metrics.

Let's examine how the false security assumption manifests in real development situations.

Scenario 1: The "Secure by AI" Authentication System

A startup needs to implement user authentication. The team lead prompts their AI assistant: "Create a secure authentication system with JWT tokens, password hashing, and session management."

The AI generates a comprehensive authentication system with hundreds of lines of code including user registration, login, token generation, middleware, and database schemas. It looks professional and complete.

What developers see:

What developers think: "The AI generated a secure, production-ready authentication system. It's using bcrypt, JWTs, proper structure — all the secure patterns I've heard about."

What reviewers think: "This looks comprehensive. The AI clearly knows authentication patterns. The code structure is clean and it's using bcrypt which I know is secure. Approved."

What they missed:

Security issues:

- Math.random() is predictable, which enables account takeover

Outcome: The team ships this authentication system. Three months later, a security audit finds:

Root cause: The appearance of security (bcrypt, JWT, middleware) created false confidence. The code looked secure, so developers assumed it was secure.

Scenario 2: The "AI-Reviewed" API Endpoint

A developer needs to create an API endpoint for data export. The team has a policy: "All code must be reviewed for security." But what does that mean for AI-generated code?

The prompt:

AI generates:

Developer's review process:

Developer's conclusion: "This looks good. Authentication is checked, the logic is clear, headers are correct. The AI did a good job."

PR review:

What both missed:

SQL Injection — ${table} allows arbitrary SQL execution

No Authorization — Users can export any table, including other users' data

Path Traversal in filename header — Can overwrite system files

No data sanitization — CSV can contain formulas that execute on open (CSV injection)

No rate limiting — Can be used for DoS

No audit logging — Data exports aren't logged

Outcome: A security researcher discovers the endpoint and exports the entire user database, including hashed passwords, emails, and PII. The result is a GDPR violation, a regulatory fine, and reputational damage.

Root cause: Both the developer and reviewer assumed "AI generated + authentication check = secure enough." The false security assumption caused both layers of review to fail.
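
A sketch, in Python with `sqlite3`, of how the export endpoint could address every item listed above except rate limiting, which is usually handled by middleware and is omitted here. SQL placeholders bind values, not identifiers, so the table name must come from an allowlist, and rows are scoped to the requesting user. Cells that start with a formula character are prefixed with a single quote, the mitigation OWASP describes for CSV injection. The download name is derived only from the allowlisted table, and each export is audit-logged. The allowlist, column names, logger name, and function names are assumptions.

```python
import csv
import io
import logging
import sqlite3
from typing import Tuple

audit_log = logging.getLogger("export.audit")

# Assumed allowlist: exportable table -> column that holds the owning user id.
EXPORTABLE_TABLES = {"orders": "user_id", "invoices": "user_id"}

# Leading characters that spreadsheet apps may treat as the start of a formula.
_FORMULA_PREFIXES = ("=", "+", "-", "@", "\t", "\r")


def neutralize_cell(value: object) -> str:
    text = "" if value is None else str(value)
    return "'" + text if text.startswith(_FORMULA_PREFIXES) else text


def export_csv(conn: sqlite3.Connection, user_id: int, table: str) -> Tuple[str, str]:
    """Return (download filename, CSV body) containing only the caller's rows."""
    owner_column = EXPORTABLE_TABLES.get(table)
    if owner_column is None:
        raise PermissionError(f"table {table!r} is not exportable")
    # Identifiers come only from the allowlist; the user id is a bound parameter.
    cursor = conn.execute(f"SELECT * FROM {table} WHERE {owner_column} = ?", (user_id,))
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(col[0] for col in cursor.description)
    for row in cursor:
        writer.writerow(neutralize_cell(v) for v in row)
    audit_log.info("user %s exported table %s", user_id, table)
    # The filename is built from the allowlisted name, never from raw input.
    return f"{table}.csv", buf.getvalue()
```

Prefixing also turns negative numbers such as -5 into text, so teams that need numeric exports sometimes apply it only to string columns.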

Scenario 3: The "Best Practices" Configuration

A team is setting up a new microservice. The tech lead asks AI: "Generate a production-ready Docker configuration with security best practices."

AI generates a comprehensive setup:

- Dockerfile with multi-stage build
- docker-compose.yml with service definitions

What the team sees:

Team's assessment:

Security team's assessment: "This follows Docker best practices. Multi-stage builds, slim images, production dependencies. Approved."

What everyone missed:

Actual security issues:

Running as root — Container has full system privileges

No integrity verification — Packages can be tampered with

No security updates — Base image has known CVEs

No read-only filesystem — Application can modify its own code

Exposed package files — package.json reveals dependency versions to attackers

No resource limits — Container can consume all host resources

No network policies — Can connect anywhere

Outcome: Six months later, a dependency vulnerability is exploited. Because the container runs as root, a container escape gives the attacker root access to the host system. Lateral movement leads to a data breach affecting multiple services.

Root cause: The AI-generated configuration looked like it followed best practices (multi-stage build, slim image) and included professional touches (health checks, logging). This created false confidence that security was handled.
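
Some of these issues can be caught mechanically before a human reviews the file. This heuristic Python check is a hypothetical pre-merge script, not a replacement for a dedicated Dockerfile linter or image scanner. It flags a final stage with no non-root `USER`, and a final base image that is not pinned by digest (which relates to the integrity gap). It also flags `npm install` where `npm ci` would install exactly what the lockfile records. Pinned digests still need regular updates so base-image security patches arrive. Resource limits and network policies are set at runtime (compose file or orchestrator), so a Dockerfile check cannot see them.

```python
import re
from typing import List


def audit_dockerfile(text: str) -> List[str]:
    """Heuristically flag a few risky patterns in a Dockerfile."""
    lines = [ln.strip() for ln in text.splitlines()]
    lines = [ln for ln in lines if ln and not ln.startswith("#")]
    from_idx = [i for i, ln in enumerate(lines) if ln.upper().startswith("FROM ")]
    if not from_idx:
        return ["no FROM instruction found"]
    # Only the last stage of a multi-stage build ships in the final image.
    final_stage = lines[from_idx[-1]:]
    image_args = [t for t in final_stage[0].split()[1:] if not t.startswith("--")]
    findings = []
    if not image_args or "@sha256:" not in image_args[0]:
        findings.append("final base image is not pinned by digest")
    users = [ln.split(None, 1)[1] for ln in final_stage if ln.upper().startswith("USER ")]
    # USER may be "name" or "name:group"; "root" and uid 0 both mean root.
    if not users or users[-1].split(":")[0] in ("root", "0"):
        findings.append("final stage runs as root; add a non-root USER")
    run_lines = [ln for ln in lines if ln.upper().startswith("RUN ")]
    if any(re.search(r"\bnpm\s+(?:install|i)\b", ln) for ln in run_lines):
        findings.append("uses 'npm install'; 'npm ci' installs exactly what the lockfile pins")
    return findings
```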

The false security assumption doesn't just affect individual developers — it scales to organizational culture and process.

1. "AI-Powered Security" Marketing

When vendors market AI coding assistants with security features ("trained on secure code," "security-aware suggestions," "detects vulnerabilities"), organizations internalize these messages and reduce other security controls.

Example messaging:

Organizational response:

Reality: The AI has no formal security verification. Marketing claims are aspirational, not measured guarantees.

2. Metrics-Driven Pressure

When leadership measures success by:

And AI tools measurably improve these metrics, there's organizational pressure to maximize AI usage and minimize friction (like thorough security review).

The dynamic:

3. Diffusion of Responsibility

In traditional development, responsibility is clear:

With AI in the mix, responsibility becomes murky:

Real incident:

A critical SQL injection reached production. Post-incident review:

Everyone points to someone else. The root cause — accepting AI output without adequate scrutiny — isn't addressed because no one feels directly responsible.

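The false security assumption creates a multiplicative risk effect: the share of changes that ship with a vulnerability equals the rate at which vulnerabilities are introduced multiplied by the share that review misses.

If developers introduce vulnerabilities 10% of the time and reviews catch 90% of them, then 0.10 × (1 − 0.90) = 0.01, so 1% of changes ship with a vulnerability.

If AI increases the vulnerability rate to 40% and false security reduces review effectiveness to 50%, then 0.40 × (1 − 0.50) = 0.20, so 20% of changes ship with a vulnerability.

The risk increased 20x.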

This compounds over time:

Within six months, a large share of your codebase could contain AI-generated vulnerabilities that passed review because of false security assumptions.

Addressing this requires both individual habits and organizational changes:

1. Explicit Trust Boundaries

Create a mental framework: "AI-generated code starts at zero trust, not neutral."

Before accepting AI suggestions, ask:

2. Security-Focused Code Review Checklist

When reviewing AI-generated code (your own or others'), explicitly check:

3. The "Explain Back" Technique

Before accepting AI-generated security-critical code, explain back to yourself (or out loud) why it's secure:

"This password reset function is secure because: [explain the security properties]"

If you can't confidently explain why it's secure, don't accept it.

4. Intentional Friction

Add deliberate pauses before accepting AI suggestions for security-critical code:

This breaks the "flow state" acceptance pattern where you quickly accept suggestions without deep thought.

1. Explicit AI Code Marking

Require developers to mark AI-generated code in commits:
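
One way to enforce the marking is a Git `commit-msg` hook, which Git runs with the path of the proposed message file as its only argument and which aborts the commit on a non-zero exit. This sketch assumes a hypothetical team convention: a trailer line reading `AI-Assisted: yes` or `AI-Assisted: no`. It rejects commits that lack one. It would be saved as `.git/hooks/commit-msg` and made executable.

```python
#!/usr/bin/env python3
"""commit-msg hook: require an AI-Assisted trailer (hypothetical convention)."""
import re
import sys
from typing import Optional

# Matches a whole line such as "AI-Assisted: yes" anywhere in the message.
TRAILER = re.compile(r"^AI-Assisted:\s*(yes|no)\s*$", re.IGNORECASE | re.MULTILINE)


def ai_assisted_flag(message: str) -> Optional[bool]:
    """Return True/False from the trailer, or None if it is missing."""
    match = TRAILER.search(message)
    if match is None:
        return None
    return match.group(1).lower() == "yes"


def main(message_path: str) -> int:
    with open(message_path, encoding="utf-8") as fh:
        message = fh.read()
    if ai_assisted_flag(message) is None:
        print("commit-msg: add a trailer line 'AI-Assisted: yes' or 'AI-Assisted: no'",
              file=sys.stderr)
        return 1  # a non-zero exit makes Git abort the commit
    return 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # Git passes the path to the commit message file as argv[1].
    sys.exit(main(sys.argv[1]))
```

Because the hook lives in each clone's `.git/hooks`, teams typically also run the same check in CI so it cannot be skipped locally.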

This:

2. Differentiated Review Requirements

Establish different review requirements for AI-assisted code:

| Code Type | Human-Written | AI-Assisted |
|---|---|---|
| Boilerplate/tests | Standard review | Standard review |
| Business logic | Senior developer review | Senior developer + security review |
| Auth/crypto | Security team review | Security team review + penetration test |
| Data access | Senior developer review | Senior developer + security review + data privacy review |
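
The table can also be encoded so that CI computes the required reviews for a pull request. In this sketch the category names and path patterns are hypothetical. Every changed path is classified, unmatched paths default to the business-logic tier, and the union of requirements is returned in first-seen order.

```python
import fnmatch
from typing import Dict, Iterable, List, Tuple

# (human-written requirements, AI-assisted requirements), mirroring the table.
REVIEW_POLICY: Dict[str, Tuple[List[str], List[str]]] = {
    "boilerplate_tests": (["standard review"], ["standard review"]),
    "business_logic": (["senior developer review"],
                       ["senior developer review", "security review"]),
    "auth_crypto": (["security team review"],
                    ["security team review", "penetration test"]),
    "data_access": (["senior developer review"],
                    ["senior developer review", "security review", "data privacy review"]),
}

# Hypothetical path rules, checked in order; the first match wins.
PATH_RULES: List[Tuple[str, List[str]]] = [
    ("auth_crypto", ["*/auth/*", "*crypto*"]),
    ("data_access", ["*/db/*", "*/repositories/*", "*.sql"]),
    ("boilerplate_tests", ["tests/*", "*_test.py", "*/test_*.py"]),
]


def classify(path: str) -> str:
    for category, patterns in PATH_RULES:
        if any(fnmatch.fnmatch(path, p) for p in patterns):
            return category
    return "business_logic"  # conservative default for anything unmatched


def required_reviews(paths: Iterable[str], ai_assisted: bool) -> List[str]:
    """Union of review requirements for every changed path."""
    needed: Dict[str, None] = {}  # dict keeps insertion order and drops repeats
    for path in paths:
        human, ai = REVIEW_POLICY[classify(path)]
        for review in (ai if ai_assisted else human):
            needed.setdefault(review, None)
    return list(needed)
```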

3. Enhanced Security Gates for AI Code

Add specific security checks for AI-assisted code:
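
As a rough illustration of one such gate, here is a regex-based scanner for a few patterns this chapter has flagged. It looks for MD5/SHA-1 hashing, non-cryptographic randomness, SQL assembled from strings or template literals, and broad exception handlers. It is a hypothetical sketch: regexes miss many variants and produce false positives (safe, allowlisted identifiers in an f-string query would still be flagged). A real pipeline would run an established static analysis tool and treat a script like this as an extra tripwire.

```python
import re
from typing import List, Tuple

# (label, regex) pairs. Deliberately simple: expect misses and false positives.
RISKY_PATTERNS = [
    ("weak hash (MD5/SHA-1)", re.compile(r"\bhashlib\.(?:md5|sha1)\(")),
    ("non-cryptographic randomness",
     re.compile(r"\brandom\.(?:random|randint|choice|getrandbits)\(|Math\.random\(\)")),
    ("SQL built from strings",
     re.compile(r"""\.execute\(\s*f["']|["'`](?:SELECT|INSERT|UPDATE|DELETE)\b[^"'`]*(?:\$\{|["'`]\s*[+%])""",
                re.IGNORECASE)),
    ("broad exception handler",
     re.compile(r"^\s*except\s*(?:Exception)?(?:\s+as\s+\w+)?\s*:")),
]


def scan_source(source: str) -> List[Tuple[int, str]]:
    """Return (line number, label) for every line matching a risky pattern."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for label, pattern in RISKY_PATTERNS:
            if pattern.search(line):
                findings.append((lineno, label))
    return findings
```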

4. Regular "AI Assumption" Training

Quarterly training sessions that:

5. Metrics That Tell the Truth

Track metrics that reveal false security assumptions:

If your metrics show:

You have a false security problem.
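
One way to make the problem measurable requires tagging merged changes as AI-assisted and later tracing vulnerabilities back to the change that introduced them. With that data, you can compare review effort and escaped vulnerabilities per line by origin. The record fields and metric names below are hypothetical. The pattern this chapter describes would appear as AI-assisted changes receiving fewer review minutes per line while producing more escaped vulnerabilities per line.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class MergedChange:
    ai_assisted: bool
    lines_changed: int
    review_minutes: float
    escaped_vulns: int  # vulnerabilities later traced back to this change


def metrics_by_origin(changes: Iterable[MergedChange]) -> Dict[str, Dict[str, float]]:
    """Review effort and escaped vulnerabilities per line, split by origin."""
    totals: Dict[str, Dict[str, float]] = {}
    for c in changes:
        key = "ai_assisted" if c.ai_assisted else "human"
        t = totals.setdefault(key, {"lines": 0, "minutes": 0.0, "vulns": 0})
        t["lines"] += c.lines_changed
        t["minutes"] += c.review_minutes
        t["vulns"] += c.escaped_vulns
    return {
        key: {
            "review_minutes_per_100_lines": 100 * t["minutes"] / t["lines"],
            "escaped_vulns_per_1000_lines": 1000 * t["vulns"] / t["lines"],
        }
        for key, t in totals.items()
        if t["lines"] > 0
    }
```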

Company: Mid-size SaaS company, 50 developers

Problem: After adopting GitHub Copilot, velocity increased 25% but production security incidents increased 400%

Phase 1: Visibility (Month 1)

Findings:

Phase 2: Calibration (Months 2-3)

Phase 3: Culture Shift (Months 4-6)

Key insight: The fix wasn't "stop using AI" — it was "stop assuming AI means secure."

Before moving to the next chapter, make sure you understand:

[1] Snyk Podcast (2025) – The AI Security Report

[2] arXiv (2023) – Do Users Write More Insecure Code with AI Assistants?

[3] ScienceDirect (2021) – Can AI artifacts influence human cognition? The effects of artificial autonomy in intelligent personal assistants
