The AI Coding Reality Check

Understanding the Security Risks

So far, we've covered why developers love AI coding assistants (Chapter 1) and the serious security risks they introduce (Chapter 2). If you're a security leader or engineering manager, your first instinct might be: "We should just ban these tools."

It's a reasonable reaction. Studies show AI assistance can increase the likelihood that insecure code appears in submissions and reviews. If a meaningful share of AI-generated suggestions contain vulnerabilities—and many developers don't spot them—why not just prohibit their use? 2 3

Here's the uncomfortable truth: banning AI tools doesn't work. In fact, it often makes the problem worse.

When organizations ban AI coding assistants, developers don't stop using them — they just stop telling you about it. This is called Shadow AI: the unauthorized use of AI systems outside official channels, without security oversight, and often in violation of company policy. 10 Unlike traditional shadow IT, which involves any unsanctioned software or hardware, shadow AI specifically refers to AI tools, platforms, and applications—introducing unique concerns around data management, model outputs, and decision-making. 10

Let's be honest about why developers ignore bans. Productivity pressure is real—developers are measured on velocity, features shipped, and bugs closed. If a tool makes them 2x faster on certain tasks, they'll use it regardless of policy. 11 The tools themselves are everywhere: ChatGPT is a free website, GitHub Copilot has a personal tier, Cursor is a download away. Unlike enterprise software that requires procurement and IT setup, AI assistants are consumer-grade tools that anyone can access in minutes. 10 Enforcement is nearly impossible—how do you detect if a developer is using ChatGPT in another browser tab, running Copilot on their personal laptop, or asking Claude to review code during their lunch break? 10 Peer effects compound the problem: when developers see colleagues shipping faster with AI assistance, they feel pressure to use the same tools or fall behind. 11 With up to 96% of enterprise employees now using generative AI applications and 75% of global knowledge workers using generative AI, blanket bans feel out of touch with current practice. 1 11 12

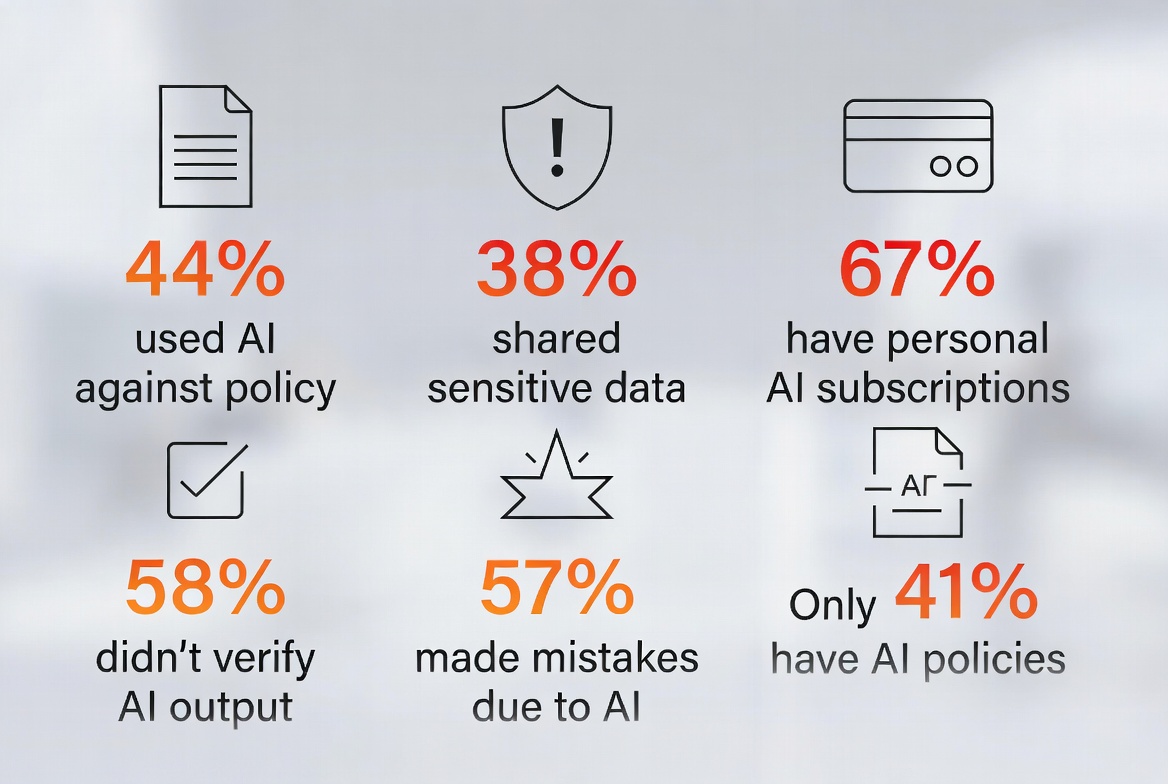

Research shows the scope of the problem is alarming:

| Category | Finding |
|---|---|
| The Adoption Reality | 67% have personal AI subscriptions for work |
| The Policy Gap | Only 41% report their organization has a GenAI policy |
| The Violation Problem | 44% have used AI against company policies |
| The Data Exposure Risk | 38% shared sensitive work info without permission |
| The Quality Issue | 58% relied on AI output without verifying |
| The Consequences | 57% have made mistakes due to AI use |
| The Data Breach Reality | ~50% uploaded sensitive company data |
| The Scale of Exposure | 1 in 5 UK companies experienced AI data leakage |

The bottom line: If you ban AI tools without providing alternatives, you're not stopping usage — you're just losing visibility and control. 9

When developers use AI tools in secret, you lose all the safeguards you'd have with managed adoption. 10 Without visibility, you don't know what sensitive information is leaving your organization—developers using unauthorized tools may be copying proprietary code into public chat interfaces for debugging, pasting customer data into AI tools for help writing queries, sharing API keys or credentials when asking for help with authentication, or uploading internal documentation to get AI explanations. 10 In fact, one study found that 1 in 5 UK companies experienced data leakage because of employees using generative AI. 8

Organizations also face serious data governance issues when employees use unauthorized AI tools. Sensitive information may be stored indefinitely on third-party servers without proper data processing agreements, data may be used to train AI models (potentially exposing proprietary information to competitors), data exfiltration can occur when AI providers store or reuse shared information, and compliance violations can result from data being processed in jurisdictions without adequate data protection, potentially violating GDPR, CCPA, HIPAA, or other regulations. 8 11 12 Enterprise agreements can include strict retention controls and no-training commitments, though some providers may retain minimal data for abuse/fraud detection. Personal accounts typically don't offer these protections. 4 5 6

When AI usage is secret, there's no process for identifying AI-generated code during review, applying extra scrutiny to code from AI assistants, running additional security scans on AI-written code, or training reviewers on what to look for. AI-generated code slips through review processes designed for human-written code. 10 Meanwhile, managed AI adoption can include IDE plugins that scan AI suggestions for vulnerabilities before insertion, CI/CD gates that flag AI-generated code for extra review, SAST rules tuned for patterns common in AI-generated code, dependency and license scanners for AI-suggested packages, and a central AI proxy with redaction, logging, allowlisting, and provider controls. Shadow AI users get none of this; they're on their own. 10
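To make the idea of a CI gate concrete, here is a minimal sketch of how a pipeline step might decide that a commit needs extra review. It assumes a hypothetical `AI-Assisted: true` commit trailer that developers (or their tooling) add to AI-assisted commits; the trailer name and the function names are illustrative and do not come from any particular product.

```python
import re

# Hypothetical trailer name; teams would pick their own convention.
AI_TRAILER = "AI-Assisted"

# A trailer line looks like "Key: value" (for example "Signed-off-by: ...").
TRAILER_LINE = re.compile(r"([A-Za-z][A-Za-z0-9-]*):\s*(.+)")


def parse_trailers(message: str) -> dict[str, str]:
    """Return 'Key: value' lines found in the final paragraph of a commit message.

    Keys are lower-cased. A message with only a subject line has no trailers.
    """
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:
        return {}
    trailers: dict[str, str] = {}
    for line in paragraphs[-1].splitlines():
        match = TRAILER_LINE.fullmatch(line.strip())
        if match:
            trailers[match.group(1).lower()] = match.group(2).strip()
    return trailers


def needs_enhanced_review(message: str) -> bool:
    """True when the commit declares itself AI-assisted via the trailer."""
    value = parse_trailers(message).get(AI_TRAILER.lower(), "")
    return value.lower() in {"true", "yes"}
```

A CI job could call `needs_enhanced_review` on each commit message in a merge request and, when it returns `True`, require an additional reviewer or a stricter scan profile. In a real pipeline, `git interpret-trailers --parse` is likely a more robust way to extract trailers than this hand-rolled parser. A gate like this only works for commits that are tagged honestly, which is one more reason it depends on managed rather than hidden usage.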

Organizations with official AI programs can train developers on how to prompt AI securely (avoiding sensitive data in prompts), when to use versus avoid AI assistance, how to review AI-generated code for security issues, and which tools are approved and why. Shadow AI users figure it out on their own, often making preventable mistakes. 12 13

The risks of shadow AI aren't theoretical—they're playing out in organizations right now. A developer at a fintech company, frustrated by slow IT approvals, used ChatGPT to debug an authentication issue. They pasted their code into ChatGPT—including an API key for their production database. 10 That API key was now in the conversation history. Enterprise/API tiers disable training on business data by default and provide retention controls, but the developer used a personal consumer account where prompts may be used to improve models unless they opt out; retention policies also differ by tier. 4 5 The company only discovered the leak months later during a security audit. By then, the API key had been rotated multiple times, but the original credential had been exposed for weeks.

At a startup building a proprietary SaaS product, a developer working late to meet a deadline used GitHub Copilot (on a personal account, against company policy) to generate code for a critical feature. Copilot suggested code that closely resembled a GPL-licensed open source project. The developer, not understanding licensing implications, merged it. Months later, during due diligence for a funding round, the legal team discovered incompatible open-source licensing in their proprietary codebase. They had to rewrite significant portions of the product, delaying their raise by months. While exact reproduction rates are debated, there is a documented risk of inadvertently introducing code that conflicts with your licensing requirements; SCA tooling in CI can mitigate this. 3

A healthcare company had strict policies against using unapproved AI tools due to HIPAA compliance requirements. A developer, stuck on a complex SQL query involving patient records, used an AI chatbot with real patient data (names removed, but other identifying information intact). 10 This likely constituted a HIPAA violation and a reportable incident, even without evidence of malicious access, because partially de-identified patient data can still be PHI. Proper de-identification under HIPAA requires removing specific identifiers or expert determination. 7

In January 2025, the AI chatbot platform DeepSeek suffered a significant breach where more than 1 million records—including chat logs, API keys, and backend system details—were exposed due to misconfigured infrastructure. 11 While the breach was not caused by employee uploads, it highlighted how easily proprietary data can be compromised when AI tools operate outside formal governance. The incident triggered regulatory scrutiny and reputational fallout, underscoring the need for centralized oversight and secure AI environments. 11

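The common thread in the first three cases: AI tools were banned or not yet approved, but developers used them anyway, creating risks that could have been managed with proper policies and oversight. The DeepSeek breach adds a further reminder that data sent to an ungoverned provider is only as safe as that provider's infrastructure.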

Before we can solve shadow AI, we need to understand why it happens. 10 Consider the speed mismatch: Employees discovered ChatGPT in November 2022 and were using it productively by December. Meanwhile, IT departments formed AI governance committees in January 2023, delivered preliminary findings in June, recommended pilot programs in September, and might approve the first official AI tool sometime in 2025. 12 14 The business can't wait years for IT to figure out an AI strategy. They have quarterly targets, customer demands, and competitive pressures. Every day without AI assistance is a day they fall further behind. 10

Traditional IT governance was designed for a world where new technologies emerged every few years, not every few weeks. Approval processes that take six months to evaluate a new database are completely inadequate for AI tools that evolve monthly. 10 While IT evaluates whether GPT-4 meets security requirements, employees are already using GPT-4o, Claude 3.5, and three other models IT hasn't even heard of. Governance can't keep up with innovation, so innovation routes around governance. 10

Here's what IT leaders don't want to admit: employees using shadow AI are often dramatically more productive than those following official channels. 10 The marketing manager using Claude to write content is producing 5x more than her peers. The developer using Cursor with GPT-4 is shipping features twice as fast. The analyst using ChatGPT for data analysis is finding insights others miss. When the productivity gain is that dramatic, employees will find a way—using personal devices, personal accounts, and personal judgment. 10

Shadow AI thrives due to several behavioral and organizational factors: 11

Think about other times organizations tried to ban popular tools. Personal email (Gmail, Hotmail) was banned by many companies in the 2000s—employees used them anyway to send work files. USB drives were banned for security reasons—employees used them anyway to transfer files between systems. Cloud storage (Dropbox, Google Drive) was banned in many enterprises—employees used it anyway to share large files.

In every case, outright bans failed. What worked was understanding why employees wanted the tool (legitimate productivity needs), providing a secure alternative (enterprise email, secure file transfer, approved cloud storage), implementing security controls (DLP, encryption, access policies), and training employees on proper usage. 10 AI coding tools will follow the same pattern.

There are scenarios where a time-bound, scoped prohibition is appropriate: in classified or highly regulated environments pending a vetted solution; until an allowlisted provider with DPA/SCCs, logging, and egress controls is live; for specific high-risk code paths (e.g., cryptography primitives, auth core); or during active incident response involving model/provider compromise. However, any temporary ban should be paired with a clear 30/60/90-day plan for managed adoption. 11

Compare two organizational responses:

❌ The Wrong Approach: "We're banning all AI coding tools. Developers caught using them will face disciplinary action."

What happens: Developers use them anyway, usage goes underground, security teams have no visibility, no training or guardrails exist, and risks increase. 10

✅ The Right Approach: "We recognize AI coding tools offer productivity benefits. We're implementing a managed AI program with approved tools, security controls, training, and oversight." 12 13

What happens: Developers use approved tools, usage is visible and monitored, security controls are in place, training reduces risky behavior, and risks are managed.

Instead of prohibition, organizations should pursue managed adoption with comprehensive governance and enablement strategies. 11 This requires six core components:

1. Establish Central AI Governance — Create a dedicated AI transformation organization or office to govern AI strategy, architecture, tools, trust, and standards across the enterprise. 11 This includes an AI Technology Review Board (a cross-functional governance body to evaluate, approve, and monitor AI platforms, tools, and systems), clear AI governance policies to prevent data loss and ensure compliance, and an AI registry maintaining a living inventory of sanctioned models, data connectors, and owners. 10 11

2. Provide Approved Tools — Select AI coding assistants that meet your security requirements: enterprise agreements with no-training commitments and strict retention controls, clear data handling terms (DPA/SCCs, subprocessors, data residency), SOC 2/ISO 27001 compliance and recent attestations, self-hosted or virtual private options for sensitive environments, and terms of service that meet your legal requirements. Offer a "choose your own AI" approach within approved boundaries—a curated menu of vetted GenAI tools tailored to different roles and use cases (e.g., copilots for developers, content tools for marketing, agentic platforms for builders) where employees can choose their preferred tool but IT retains oversight. 11

3. Implement Technical Controls — Deploy a centralized AI egress proxy with provider/model allowlisting, prompt/response logging, PII/secret redaction and context filtering, and rate limits with usage analytics. Add IDE-integrated security scanning and secrets detection for AI suggestions. Set up CI/CD gates that flag AI-influenced diffs for enhanced review, enforce SAST/DAST on high-risk changes, and run dependency and license scanning (SCA). Implement code provenance tagging (e.g., commit trailers or metadata) to mark AI-assisted changes, DLP for clipboard/paste events and outbound traffic, package policy enforcement and version pinning, and automated discovery platforms to identify and catalog AI systems, scan for shadow AI tools, and close visibility gaps. 11

4. Create Safe Experimentation Environments — Stand up an AI labs function providing a sandboxed environment where teams can safely explore new AI platforms and tools, test ideas, and validate use cases before scaling. Labs reduce the need for unsanctioned experimentation while accelerating innovation. 11 AI sandboxes should include contained environments for testing and validating models, synthetic or anonymized data, freedom within defined boundaries, and a fast-track process to promote successful experiments to production. 10

5. Train and Set Expectations — Promote employee education and awareness by training teams on AI risk, trusted AI principles, security best practices, and data handling, 11 including scenario-based examples that clarify gray areas. Educate developers on which tools are approved and how to access them, what data can and cannot be shared with AI, how to prompt AI for secure code, how to review AI-generated code, and when AI is appropriate versus prohibited. Once internal policies are established, communicate widely what generative AI tools they are free to use, for what purposes, and with what kinds of data. Be as specific as possible about what is safe use and what is not. 11 Publish an AI Acceptable Use Policy and a clear exception/opt-out process.

6. Foster a Culture of Transparency and Innovation — Make it safe for employees to share how they're using GenAI. Reward responsible innovation and engage teams early in piloting new tools or workflows. 11 Consider an AI amnesty program: declare a period (e.g., 30 days) where employees can register their shadow AI usage without consequences. They share what tools they're using, what data they're processing, what business problems they're solving, and what productivity gains they're seeing. In return, help them transition to sanctioned alternatives or fast-track approval for critical tools. 10 Establish a Citizen AI Developer Program to create citizen AI developers who can evaluate and recommend new AI tools, train colleagues on approved AI usage, act as liaisons between departments and IT, and help shape practical, usable policies. 10
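The centralized AI egress proxy described in component 3 can be illustrated with a small sketch of its two core checks: provider allowlisting and secret/PII redaction. Everything here is an assumption made for illustration: the host name, the pattern set, and the function names are hypothetical, and a production proxy would rely on a maintained secrets-detection ruleset rather than three regular expressions.

```python
import re
from dataclasses import dataclass, field

# Hypothetical allowlist; in practice this would come from the AI registry.
ALLOWED_PROVIDERS = {"approved-provider.example"}

# Illustrative patterns only; real deployments need far broader coverage.
REDACTION_PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 upper-case letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Assignments such as api_key = "..." or password: ...
    "assigned_secret": re.compile(
        r"(?:api[_-]?key|secret|password|token)\s*[:=]\s*['\"]?[^\s'\"]{8,}['\"]?",
        re.IGNORECASE,
    ),
    # Simple email matcher as a stand-in for PII detection.
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


@dataclass
class ProxyDecision:
    allowed: bool
    prompt: str                      # redacted prompt, empty if blocked
    findings: list[str] = field(default_factory=list)


def screen_prompt(provider_host: str, prompt: str) -> ProxyDecision:
    """Block non-allowlisted providers; otherwise redact known patterns."""
    if provider_host not in ALLOWED_PROVIDERS:
        return ProxyDecision(False, "", ["provider_not_allowlisted"])
    findings: list[str] = []
    redacted = prompt
    for label, pattern in REDACTION_PATTERNS.items():
        redacted, count = pattern.subn(f"[REDACTED:{label}]", redacted)
        if count:
            findings.append(label)
    return ProxyDecision(True, redacted, findings)
```

For example, `screen_prompt("approved-provider.example", 'api_key = "sk_live_abcdef123456"')` returns a decision whose `prompt` no longer contains the key and whose `findings` list records `assigned_secret`, which is the kind of event the proxy would log and count. Redacting rather than rejecting keeps developers productive while still keeping the secret out of the provider's conversation history.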

Monitor the effectiveness of your managed adoption program by tracking percentage of developers using approved tools, percentage of code diffs with AI-influence tags, vulnerability density in AI versus non-AI diffs, percentage of prompts redacted/blocked by the proxy, incidents attributable to AI-generated code and mean time to remediate, license policy violations caught in CI, AI tool discovery through inventory systems, and employee satisfaction with approved AI tools. 11
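Two of these metrics, the share of diffs carrying an AI-influence tag and vulnerability density in AI versus non-AI diffs, are simple ratios once diffs are tagged. The sketch below shows one possible way to compute them, assuming a hypothetical `DiffRecord` shape and defining density as security findings per 1,000 changed lines; your program may define these differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DiffRecord:
    lines_changed: int
    findings: int       # security findings attributed to this diff
    ai_assisted: bool   # e.g. derived from a provenance tag on the commit


def ai_tag_rate(diffs: list[DiffRecord]) -> float:
    """Fraction of diffs that carry an AI-influence tag (0.0 for no diffs)."""
    if not diffs:
        return 0.0
    return sum(d.ai_assisted for d in diffs) / len(diffs)


def vulnerability_density(diffs: list[DiffRecord], ai_assisted: bool) -> Optional[float]:
    """Findings per 1,000 changed lines for the chosen group.

    Returns None when the group has no changed lines, so that an empty
    group is not mistaken for a group with zero vulnerabilities.
    """
    group = [d for d in diffs if d.ai_assisted == ai_assisted]
    lines = sum(d.lines_changed for d in group)
    if lines == 0:
        return None
    return 1000 * sum(d.findings for d in group) / lines
```

Comparing `vulnerability_density(diffs, True)` with `vulnerability_density(diffs, False)` over the same reporting period gives a rough signal of whether AI-assisted changes need more scrutiny. Treat the comparison with care: tagged and untagged diffs may differ in size and risk for reasons unrelated to AI.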

A practical 30/60/90-day implementation plan helps organizations move from policy to practice:

30 days: Publish AI Acceptable Use Policy (v1), select interim approved tools and tiers (enterprise/API with no-training), stand up an AI proxy with redaction, logging, and allowlisting, pilot with 2–3 teams and enable IDE scanning, secrets detection, and SCA in CI, and launch amnesty program for shadow AI disclosure. 10

60 days: Expand to 30–50% of developers and add CI gates for AI-flagged diffs, roll out reviewer training and an AI code review checklist, execute DPAs/SCCs and verify provider SOC 2/ISO attestations, define metrics and start weekly reporting, and establish AI labs for safe experimentation. 11

90 days: Complete org-wide rollout with approved tools as default, enforce dependency and package policies, conduct quarterly risk reviews and publish program KPIs and learnings, and deploy automated AI inventory and monitoring systems. 11

When reviewing code that may be AI-generated, check that:

While shadow AI contains inherent risks, organizations should recognize and harness its potential benefits. 11 Shadow AI drives accelerated experimentation where employees rapidly test new ideas and workflows, surfacing high-impact use cases organically. It increases engagement and empowerment—when employees feel trusted to explore new tools, it fuels motivation, creativity, and retention. Shadow AI provides real-time insight into system gaps, as the tools people choose reveal friction points in existing AI systems and highlight where modernization is needed. It enables tool discovery for future investment, surfaces emerging tools that can inform enterprise-scale adoption and innovation strategy, and drives organic upskilling as hands-on use of various GenAI tools builds prompting, automation, and critical thinking skills. Shadow AI fosters grassroots innovation where employees often discover novel use cases that top-down strategies might overlook, encourages cross-functional collaboration in problem-solving teams, and pressure-tests enterprise AI strategy by revealing where official AI systems, tools, policies, or platforms are falling short. By recognizing and channeling these benefits into sanctioned programs, organizations can turn shadow AI from a liability into a strategic advantage. 11

However, ignoring the shadow AI problem means accepting significant costs:

We've established three key points: AI coding tools offer real productivity benefits (Chapter 1), they also introduce serious security risks (Chapter 2), and banning them doesn't work and often makes things worse (this chapter). The solution is clear: managed adoption with proper policies, technical controls, and training. Organizations that respond with rigid control will stifle innovation. Those that respond with clear strategy, empowered oversight, and curated choice will unlock AI's full potential—safely and at scale. 11

In the next chapter, we'll explore The Productivity Paradox—understanding when AI actually helps versus when it hurts, so you can set realistic expectations and make smart decisions about where to deploy these tools.

Before moving on, make sure you understand these key takeaways:

[1] GitLab (2024) – Global DevSecOps Report: AI in Software Development

[2] Pearce et al. (2021) – Asleep at the Keyboard? Assessing the Security of GitHub Copilot's Code Contributions

[3] Perry et al. (2023) – Do Users Write More Insecure Code with AI Assistants?

[4] OpenAI – API Data Usage Policies

[5] OpenAI – ChatGPT Enterprise Privacy and Data Control

[6] GitHub – Copilot for Business

[7] U.S. HHS – Guidance Regarding Methods for De-identification of Protected Health Information

[8] CIO.com (2025) – Shadow AI: The Hidden Agents Beyond Traditional Governance

[9] Dell Technologies / Forbes (2025) – Powering Possibilities in Healthcare with AI and Edge Computing

[10] Knight, Michael (2025) – Shadow AI: The Problem What Teams Don't Know Can Hurt Them

[11] KPMG (August 2025) – Shadow AI is Already Here: Take Control, Reduce Risk, and Unleash Innovation
