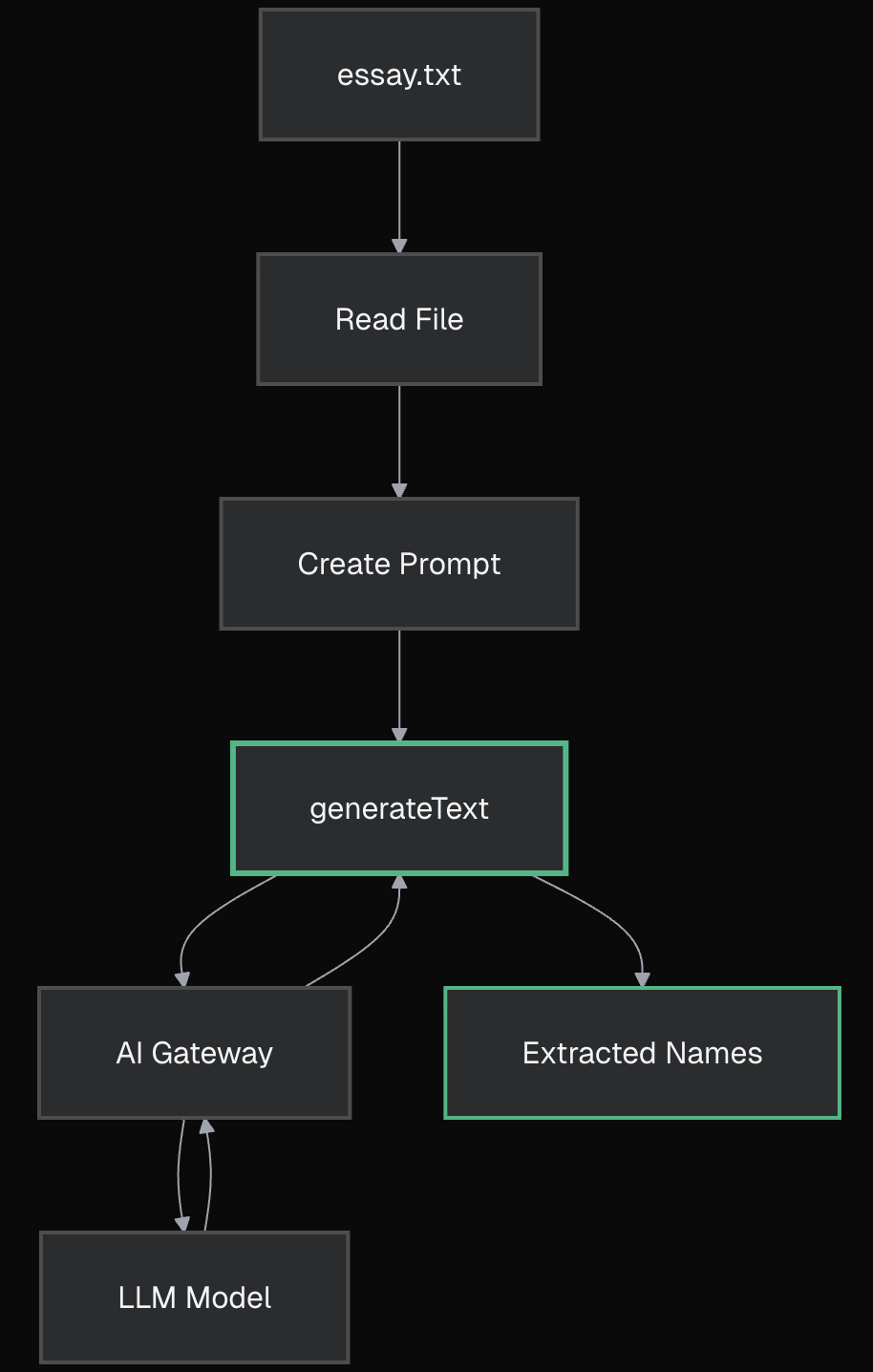

Now that you've learned some theory and set up your project, it's time to ship some code. You will build and run a script that extracts information from text using the AI SDK's generateText method. You'll see firsthand how tweaking your prompt or swapping models changes your results.

Open your project code. Look for app/(1-extraction)/extraction.ts and essay.txt.

Update the contents of extraction.ts with this code that extracts names from the essay:
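A minimal sketch of what such a script might look like. To keep it runnable without network access or API keys, the model call is injected as a function; `ModelCall`, `buildExtractionPrompt`, and `extractNames` are hypothetical names chosen for illustration, and the commented wiring to `generateText` assumes an AI SDK version that accepts gateway model strings such as `openai/gpt-4.1`.

```typescript
// Sketch only: the model call is injected so this file runs offline.
// In the real script you would likely wire it to the AI SDK, for example:
//   import { generateText } from "ai";
//   const callModel: ModelCall = async (model, prompt) =>
//     (await generateText({ model, prompt })).text;
// and read the essay from app/(1-extraction)/essay.txt with Node's fs module.

// Hypothetical type: "send a prompt to a model, get text back".
type ModelCall = (model: string, prompt: string) => Promise<string>;

// Combine the instruction and the essay into a single prompt string.
function buildExtractionPrompt(essay: string): string {
  return `Extract all the names mentioned in this essay.\n\nEssay:\n${essay}`;
}

// Send the prompt to the chosen model and return the raw text response.
async function extractNames(
  essay: string,
  callModel: ModelCall,
  model: string = "openai/gpt-4.1",
): Promise<string> {
  return callModel(model, buildExtractionPrompt(essay));
}
```

Keeping prompt construction separate from the model call makes it easy to change either one on its own, which is what the rest of this lesson asks you to do.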


From your terminal, run:

    pnpm extraction

You'll see the AI extracting names from the essay. Your first feature works. Nice!


Verification Task

Check app/(1-extraction)/essay.txt and use search (Cmd+F/Ctrl+F) to verify the names. Did the AI nail it or miss some?
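The manual search can also be approximated in code. The sketch below assumes you already have the extracted names as an array of strings; `findMissingNames` and the sample data are hypothetical and only illustrate the check.

```typescript
// Return the names that do NOT appear verbatim in the essay (case-insensitive).
function findMissingNames(names: string[], essay: string): string[] {
  const haystack = essay.toLowerCase();
  return names.filter((name) => !haystack.includes(name.trim().toLowerCase()));
}

// Hypothetical sample data, not taken from the course essay.
const essaySample = "Ada Lovelace wrote to Charles Babbage about the engine.";
const extracted = ["Ada Lovelace", "Charles Babbage", "Alan Turing"];

// Logs the names that could not be found in the text.
console.log(findMissingNames(extracted, essaySample));
```

This only catches names the model returned that are not in the text. Names the model missed still need a read-through of the essay.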

LLMs process text as 'tokens' (roughly 4 characters of English text each). Understanding tokens helps you optimize speed and cost:

- Visualize tokenization at tiktokenizer.vercel.app

- Count tokens programmatically with tiktoken: pnpm add tiktoken

- Monitor usage to estimate costs and stay within context limits

- Try pasting different prompts into Tiktokenizer to see surprising patterns (spaces matter!).
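For quick back-of-the-envelope numbers, the 4-characters-per-token rule of thumb can be turned into a rough estimator. This is a heuristic sketch, not a real tokenizer: a library such as tiktoken gives exact counts, and the price and context-limit parameters here are hypothetical values you would supply yourself.

```typescript
// Rough heuristic from the rule of thumb above: about 4 characters per token.
// A real tokenizer will give different, exact counts.
const CHARS_PER_TOKEN = 4;

// Estimate the token count of a string from its character length.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// pricePerMillionTokens is a hypothetical figure; check your provider's pricing.
function estimateInputCost(text: string, pricePerMillionTokens: number): number {
  return (estimateTokens(text) / 1e6) * pricePerMillionTokens;
}

// True when the estimated size fits within a given context limit (in tokens).
function fitsContext(text: string, contextLimitTokens: number): boolean {
  return estimateTokens(text) <= contextLimitTokens;
}

console.log(estimateTokens("Extract all the names mentioned in this essay."));
```

An estimate like this is enough to spot a prompt that is far too large before you send it. Use a real tokenizer when you need accurate billing figures.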

Running the script once is just the start. Working with LLMs is all about iteration. Play with the prompt and see for yourself:

After each change, re-run pnpm extraction and compare the output.
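One way to organize this kind of iteration is to keep the instruction separate from the essay and swap in one variant at a time. The variants below are hypothetical examples, not the course's own prompts, and `buildPrompt` is an illustrative helper.

```typescript
// Hypothetical instruction variants to try one at a time.
const instructionVariants: string[] = [
  "Extract all the names mentioned in this essay.",
  "Extract all the names mentioned in this essay. Return one name per line.",
  "List only the company names mentioned in this essay.",
  "Summarize this essay in one sentence.",
];

// Combine an instruction with the essay text into a single prompt.
function buildPrompt(instruction: string, essay: string): string {
  return `${instruction}\n\nEssay:\n${essay}`;
}

// Build every variant against the same (placeholder) essay text.
const essayText = "Placeholder essay text goes here.";
const prompts = instructionVariants.map((v) => buildPrompt(v, essayText));
console.log(prompts.length);
```

Changing only the instruction while holding the essay fixed makes it easier to attribute a difference in output to the prompt wording.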

Available OpenAI Models via Vercel AI Gateway:

- openai/gpt-5: Most capable for complex reasoning

- openai/gpt-4.1: Fast & cost-effective for most tasks (non-reasoning)

- openai/gpt-5-nano: Fastest for simple tasks

- openai/gpt-4.1-mini: Previous generation, still capable (non-reasoning)

Available Anthropic Models via Vercel AI Gateway:

- anthropic/claude-sonnet-4: Strong reasoning & analysis

Available Google Models via Vercel AI Gateway:

- google/gemini-2.5-pro: Advanced multimodal capabilities

- google/gemini-2.5-flash: Fast responses, good balance

- google/gemini-2.5-flash-lite: Lightweight & quick

- google/gemini-2.0-flash: Previous flash version

See the Vercel AI Gateway models for pricing & details, or the OpenAI models documentation for OpenAI-specific info.

Simply swap the model string to experiment - the AI SDK handles all the provider differences for you!
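To compare models side by side, the same prompt can be sent to several model strings in a loop. The sketch below again injects the model call so it runs offline; `ModelCall`, `compareModels`, and `splitModelId` are hypothetical helpers, and in practice `callModel` would presumably wrap `generateText`.

```typescript
// Hypothetical type: "send a prompt to a model, get text back".
type ModelCall = (model: string, prompt: string) => Promise<string>;

interface ModelResult {
  model: string;
  text: string;
  ms: number; // wall-clock time for the call, in milliseconds
}

// Model IDs taken from the lists above.
const candidateModels = [
  "openai/gpt-4.1",
  "anthropic/claude-sonnet-4",
  "google/gemini-2.5-flash",
];

// Split a "provider/model" string into its two parts.
function splitModelId(id: string): { provider: string; name: string } {
  const slash = id.indexOf("/");
  if (slash === -1) throw new Error(`Expected "provider/model", got "${id}"`);
  return { provider: id.slice(0, slash), name: id.slice(slash + 1) };
}

// Run the same prompt against each model in turn and record timing.
async function compareModels(
  models: string[],
  prompt: string,
  callModel: ModelCall,
): Promise<ModelResult[]> {
  const results: ModelResult[] = [];
  for (const model of models) {
    const start = Date.now();
    const text = await callModel(model, prompt);
    results.push({ model, text, ms: Date.now() - start });
  }
  return results;
}
```

The calls run sequentially so the timings are not skewed by parallel requests. Switching to parallel calls would be faster but harder to compare.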

This simple extraction pattern powers serious production features.

It's the same pattern: send content + instructions, process the response.
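The "process the response" half of the pattern often means turning free-form model text into structured data. A minimal sketch, assuming the model returns names separated by newlines or commas, possibly with bullets or numbering; `parseNameList` is a hypothetical helper, and real responses may need more careful handling.

```typescript
// Turn a free-form model response into a clean, de-duplicated list of names.
// Assumption: names are separated by newlines or commas.
function parseNameList(response: string): string[] {
  const seen = new Set<string>();
  const names: string[] = [];
  for (const rawLine of response.split(/\r?\n|,/)) {
    // Strip leading bullets ("-", "*", "•") or numbering ("1.", "2)").
    const name = rawLine.replace(/^\s*(?:[-*•]|\d+[.)])\s*/, "").trim();
    const key = name.toLowerCase();
    if (name && !seen.has(key)) {
      seen.add(key);
      names.push(name);
    }
  }
  return names;
}

console.log(parseNameList("- Ada Lovelace\n- Charles Babbage\n- Ada Lovelace"));
```

Splitting on commas would break names written as "Last, First", so a prompt that asks for one name per line keeps the parsing simpler.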

- generateText = your basic AI workhorse

- prompt = what guides the AI

- model = power/speed/cost tradeoff

You've built your first AI script and experienced the power of prompt engineering. In the next lesson, you'll learn about different model types and their performance characteristics. Understanding when to use fast models vs reasoning models is crucial for building AI features that deliver the right user experience.

After that, you'll be ready for "invisible AI" - behind-the-scenes features that enhance your product's UX using the patterns you've learned here.
