Now that you understand why Invisible AI matters, you'll put it into practice with classification: turning messy, unstructured text into clean, categorized data. You will use the AI SDK's generateObject with Zod schemas to sort unstructured text into predefined categories.

Project Context

This lesson continues with the same codebase from AI SDK Dev Setup. You'll find the classification example files in the app/(2-classification)/ directory.

Imagine getting flooded with user feedback, support tickets, or GitHub issues. It's a goldmine of information, but it's also a messy, constant firehose. Manually reading and categorizing everything is slow and tedious, and it doesn't scale.

This is where LLM-based classification shines. We can teach an LLM our desired categories and have it automatically sort incoming text.

Take a look at the support_requests.json file from our project (app/(2-classification)/support_requests.json). It contains typical user messages like:

json

Our goal is to automatically assign a category (like account issues or product issues) to each request.

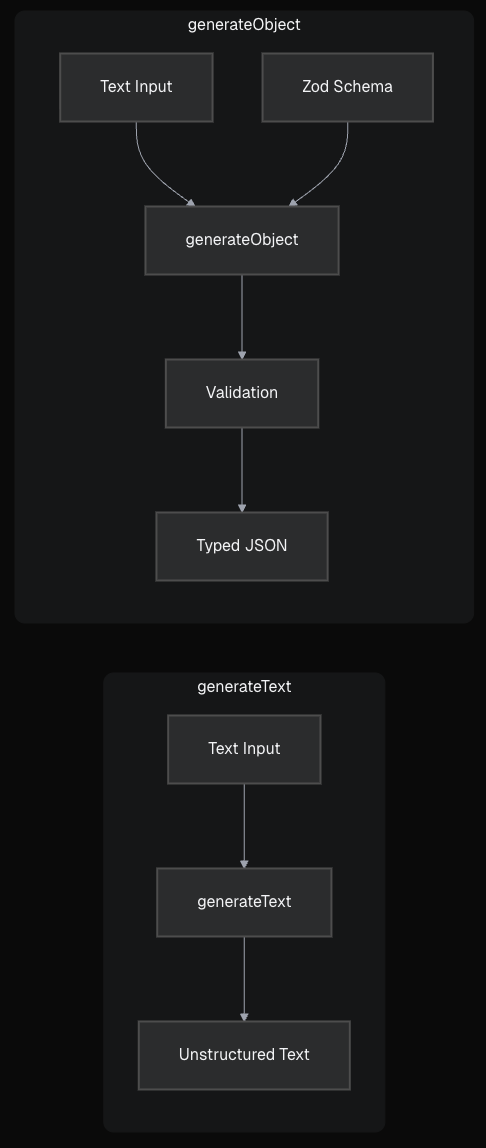

generateObject

In the last lesson, we used generateObject to generate structured data from information provided in our prompt. It's perfect for this task because structured output is exactly what we want: each request paired with its category.

To make this work reliably, we need to tell the LLM exactly what we want to generate. That's where Zod comes in.

Zod is a TypeScript schema definition and validation library that gives you a powerful and relatively simple way to tell the LLM what shape of data you expect in its output. It's like TypeScript types, but with runtime validation, which makes it a good fit for ensuring AI outputs match your expectations.

Here's a short example of a Zod schema:

typescript

This schema describes the shape of a name object and the type of data expected for its properties. Zod provides many different data types and structures as well as the ability to define custom types.
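As a minimal sketch of what a schema for a name object could look like (the field names `firstName` and `lastName` and the sample values are assumptions chosen for illustration), the following shows both the static type and the runtime validation that Zod provides:

```typescript
import { z } from 'zod';

// Hypothetical schema for a name object; the field names are assumptions.
const nameSchema = z.object({
  firstName: z.string(),
  lastName: z.string(),
});

// Derive a static TypeScript type from the same schema.
type Name = z.infer<typeof nameSchema>;

const example: Name = { firstName: 'Ada', lastName: 'Lovelace' };

// safeParse validates at runtime and reports success instead of throwing.
const valid = nameSchema.safeParse(example);
const invalid = nameSchema.safeParse({ firstName: 'Ada' }); // lastName is missing

console.log(valid.success, invalid.success);
```

The same schema object serves as both the type definition and the validator, so the two cannot drift apart.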

With Zod you can define a schema that includes the request text and the available categories.

z.enum

Open app/(2-classification)/classification.ts. The file already has imports set up and TODOs to guide you.

The first step is to define our categories and the structure we want for each classified item using Zod. The z.enum() function is ideal for defining a fixed set of possible categories.

Replace the first TODO with this schema definition:

typescript
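A minimal sketch of such a schema definition might look like the following. The category names are assumptions based on the labels mentioned in this lesson (account issues, product issues, enterprise sales, billing), so adjust them to match your own data:

```typescript
import { z } from 'zod';

// Category names are assumptions; use whatever labels fit your data.
const categories = [
  'billing',
  'product_issues',
  'enterprise_sales',
  'account_issues',
  'product_feedback',
] as const;

const classificationSchema = z.object({
  request: z.string(), // the original request text
  category: z.enum(categories), // must be exactly one of the labels above
});

// Static type for one classified item, derived from the schema.
type Classification = z.infer<typeof classificationSchema>;

const sample: Classification = {
  request: 'I cannot log in to my account.',
  category: 'account_issues',
};
console.log(classificationSchema.safeParse(sample).success);
```

Because z.enum only accepts the listed strings, any label outside the list is rejected at validation time.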

Now replace the remaining TODOs with the generateObject implementation:

typescript
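A sketch of how the generateObject call might be wired up is shown below. The function names `classifyRequests` and `buildPrompt`, the prompt wording, and the category names are all assumptions. The model is passed in by the caller, so no particular provider is assumed:

```typescript
import { generateObject, type LanguageModel } from 'ai';
import { z } from 'zod';

// Category names are assumptions; see the schema step above.
const classificationSchema = z.object({
  request: z.string(),
  category: z.enum([
    'billing',
    'product_issues',
    'enterprise_sales',
    'account_issues',
    'product_feedback',
  ]),
});

// Hypothetical helper: put every request into a single prompt as JSON.
function buildPrompt(requests: unknown[]): string {
  return `Classify the following support requests.\n\n${JSON.stringify(requests, null, 2)}`;
}

// Hypothetical wrapper around generateObject.
async function classifyRequests(model: LanguageModel, requests: unknown[]) {
  const result = await generateObject({
    model,
    output: 'array', // the schema describes ONE element of the returned array
    schema: classificationSchema,
    prompt: buildPrompt(requests),
  });
  return result.object; // array of { request, category }
}
```

With output: 'array', the schema describes a single item and the SDK returns an array of such items on result.object.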

Code Breakdown:

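- Imports: generateObject, Zod, and our JSON data.
- Schema: defined with z.object and z.enum.
- Call: generateObject receives model, prompt, schema, and importantly, output: 'array'.
- Result: the classified items are available on result.object.

Time to see it in action! In your terminal, from the project root, run pnpm classification.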

You should get a clean JSON array like this:

json

Success! Structured, usable data instead of messy text. Now you can route billing questions to finance, bugs to engineering, and feature requests to product automatically.
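Once the output is structured, routing becomes a plain lookup. The sketch below is one way this could look. The category names, team names, and sample requests are hypothetical and only mirror the billing, bug, and feature request examples above:

```typescript
// Hypothetical category and team names, for illustration only.
type Category = 'billing' | 'bug' | 'feature_request';
type Team = 'finance' | 'engineering' | 'product';

interface ClassifiedRequest {
  request: string;
  category: Category;
}

// A Record keyed by Category forces every category to have a destination.
const teamByCategory: Record<Category, Team> = {
  billing: 'finance',
  bug: 'engineering',
  feature_request: 'product',
};

// Group classified requests into one queue per team.
function routeRequests(items: ClassifiedRequest[]): Record<Team, string[]> {
  const result: Record<Team, string[]> = { finance: [], engineering: [], product: [] };
  for (const item of items) {
    result[teamByCategory[item.category]].push(item.request);
  }
  return result;
}

// Made-up sample data standing in for result.object.
const teamQueues = routeRequests([
  { request: 'I was charged twice this month.', category: 'billing' },
  { request: 'The export button crashes the app.', category: 'bug' },
]);
console.log(teamQueues);
```

Typing the lookup as Record<Category, Team> means the compiler flags any category that has no team assigned.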

That's classification power.

Let's make this even more useful. What if we wanted the AI to estimate the urgency of each request? Easy! Just add it to the schema:

typescript

Run pnpm classification again. You'll now see the urgency field added to each object, with the AI making its best guess (e.g., "high" for API integration help, "medium" for the export feature issue).
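One way to add the field, sketched here under assumed names, is to extend an existing schema rather than edit it in place. The urgency levels `low`, `medium`, and `high` are an assumption consistent with the example values above:

```typescript
import { z } from 'zod';

// Base schema; category names are assumptions.
const baseSchema = z.object({
  request: z.string(),
  category: z.enum([
    'billing',
    'product_issues',
    'enterprise_sales',
    'account_issues',
    'product_feedback',
  ]),
});

// extend() returns a new object schema with the extra field added.
const withUrgencySchema = baseSchema.extend({
  urgency: z.enum(['low', 'medium', 'high']),
});

const urgent = withUrgencySchema.safeParse({
  request: 'Our API integration is down.',
  category: 'product_issues',
  urgency: 'high',
});
console.log(urgent.success);
```

Adding the field directly inside z.object works just as well; extend() is only convenient when you want to keep the original schema around.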

.describe()

Now, let's throw a curveball: support_requests_multilanguage.json. This file has requests in Spanish, German, Chinese, etc.

Can your setup handle it?

Modify classification.ts:

typescript

- Change the import to import supportRequests from './support_requests_multilanguage.json';
- Add language: z.string() to the classificationSchema.
- Run pnpm classification.

You'll see the AI detects the languages, but it may give you codes ("ES") where we want full names ("Spanish"). Getting that precision takes more instruction. You might think to update the prompt itself, but we are going to update the schema to better indicate what data is expected.

Solution: Use .describe() to prompt the model for exactly what you want for any specific key!

Update the language field in your schema to include a description of what is expected in the field:

typescript

Run the script one more time. You should now see clean, full language names, thanks to the more specific schema instructions.
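A sketch of a described field might look like this. The description wording is an assumption, not the exact text from the lesson files:

```typescript
import { z } from 'zod';

// The description text is an assumption; phrase it however suits your data.
const languageField = z
  .string()
  .describe('The language the support request is written in, as a full name, e.g. English or Spanish.');

const schemaWithLanguage = z.object({
  request: z.string(),
  language: languageField,
});

// describe() adds guidance for the model but no validation rule:
// a language code is still a valid string as far as Zod is concerned.
const codeStillPasses = schemaWithLanguage.safeParse({
  request: 'Hola, necesito ayuda.',
  language: 'ES',
});
console.log(codeStillPasses.success);
```

Note the design trade-off: a description steers the model, while a z.enum of language names would enforce the format at the cost of having to list every language up front.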

What if the AI gets it wrong?

The AI SDK uses your Zod schema to validate the LLM's output. If the model returns a category not in your z.enum list (e.g., "sales_inquiry" instead of "enterprise_sales") or fails other schema rules, generateObject will throw a validation error. This prevents unexpected data structures from breaking your application. You might need to refine your prompt, schema descriptions, or use a more capable model if validation fails often.
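To see the kind of check involved, the sketch below runs the "sales_inquiry" example through Zod directly. It illustrates schema validation on its own and is not a reproduction of what generateObject does internally. The category names are assumptions:

```typescript
import { z } from 'zod';

// Category names are assumptions.
const categorySchema = z.enum([
  'billing',
  'product_issues',
  'enterprise_sales',
  'account_issues',
  'product_feedback',
]);

// A label outside the enum fails validation; a listed label passes.
const rejected = categorySchema.safeParse('sales_inquiry');
const accepted = categorySchema.safeParse('enterprise_sales');

if (!rejected.success) {
  // Each issue explains why the value did not match the schema.
  console.log(rejected.error.issues.map((issue) => issue.message));
}
console.log(accepted.success);
```

In application code, wrapping the generateObject call in try/catch gives you a place to log the failure, retry, or fall back to manual triage.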

Iteration is the name of the game. Add fields to your schema incrementally. Use .describe() to fine-tune the output for specific fields when the default isn't perfect. This schema-driven approach keeps your AI interactions predictable and robust.

As you build more sophisticated classification systems, you'll encounter edge cases and ambiguous inputs. The next callout provides a structured approach to refining your schemas when basic descriptions aren't enough.

💡 Refining Classification Accuracy

Getting inconsistent or ambiguous categories? Try asking an AI assistant to help refine your approach:

text

Real-world support requests often belong to multiple categories simultaneously.

Try these steps:

Challenge:

How does the model's accuracy change with multi-label classification? Does it tend to assign too many or too few categories? How might you optimize the prompt for better multi-label results?
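As a starting point for experimenting, a multi-label schema could swap the single category for an array of categories. This is a sketch under assumptions: the field name `categories`, the category names, and the bounds of one to three labels are all illustrative choices:

```typescript
import { z } from 'zod';

// Category names are assumptions.
const categoryEnum = z.enum([
  'billing',
  'product_issues',
  'enterprise_sales',
  'account_issues',
  'product_feedback',
]);

const multiLabelSchema = z.object({
  request: z.string(),
  // At least one label and at most three; the bounds are an arbitrary choice.
  categories: z.array(categoryEnum).min(1).max(3),
});

const twoLabels = multiLabelSchema.safeParse({
  request: 'I was double charged and now I cannot log in.',
  categories: ['billing', 'account_issues'],
});
const noLabels = multiLabelSchema.safeParse({ request: 'Hello?', categories: [] });
console.log(twoLabels.success, noLabels.success);
```

Capping the array length is one lever against over-labeling; a .describe() on the field asking for only the clearly applicable categories is another.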

Build a streaming moderation pipeline that classifies, scores, and routes messages at enterprise scale.

Try these steps:

Challenge:

Add Redis caching for repeated messages and implement rate limiting middleware.

typescript
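The sketch below shows the two ideas from the challenge in a dependency-free form. It uses an in-memory Map as a stand-in for Redis, and every class and method name is hypothetical. A production version would also need entry expiry and handling for failed classifications:

```typescript
// In-memory stand-in for a Redis cache; all names here are hypothetical.
class ClassificationCache<T> {
  private readonly entries = new Map<string, Promise<T>>();

  // Normalize so trivially different copies of a message share one key.
  private keyFor(message: string): string {
    return message.trim().toLowerCase();
  }

  // Return the cached promise, or start the classification once and store it.
  getOrClassify(message: string, classify: (message: string) => Promise<T>): Promise<T> {
    const key = this.keyFor(message);
    let pending = this.entries.get(key);
    if (pending === undefined) {
      pending = classify(message);
      this.entries.set(key, pending);
    }
    return pending;
  }
}

// Fixed-window rate limiter: at most `limit` calls per `windowMs` per client.
class FixedWindowRateLimiter {
  private readonly windows = new Map<string, { start: number; count: number }>();
  private readonly limit: number;
  private readonly windowMs: number;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  allow(clientId: string, now: number = Date.now()): boolean {
    const current = this.windows.get(clientId);
    if (current === undefined || now - current.start >= this.windowMs) {
      this.windows.set(clientId, { start: now, count: 1 }); // start a new window
      return true;
    }
    if (current.count < this.limit) {
      current.count += 1;
      return true;
    }
    return false; // limit reached inside the current window
  }
}
```

Caching the promise rather than the resolved value means two identical messages arriving at the same moment trigger only one model call.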

Enhance your schema-validation skills with these resources:

- Use z.enum([...categories]) to define your classification labels.
- Use output: 'array' to classify multiple items at once.
- Use .describe() on schema fields to guide the model's output format (like getting full language names).

This technique can be used to automate workflows like ticket routing, content moderation, and feedback analysis.

You've successfully used generateObject to classify text. Now, let's apply similar techniques to another powerful Invisible AI feature: summarization. In the next lesson you'll build a tool that creates concise summaries from longer text inputs, helping users quickly grasp key information. We'll also touch on displaying this neatly in your user interface (UI).
